'Smart' Rovers Are the Future of Planetary Exploration

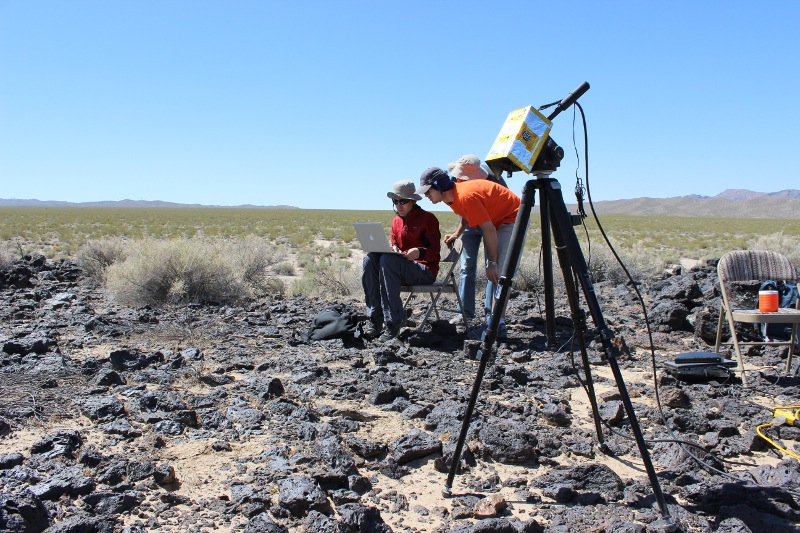

Researchers supported by NASA's Astrobiology Science and Technology Instrument Development (ASTID) program are designing algorithms and instruments that could help future robotic missions make their own decisions about surface sites to explore on other planets.

One such instrument is the TextureCam, which is currently being tested with Mars in mind. The technology will improve the efficiency of planetary missions, allowing rovers to collect more data and perform more experiments in less time, scientists say.

Getting the most for your money

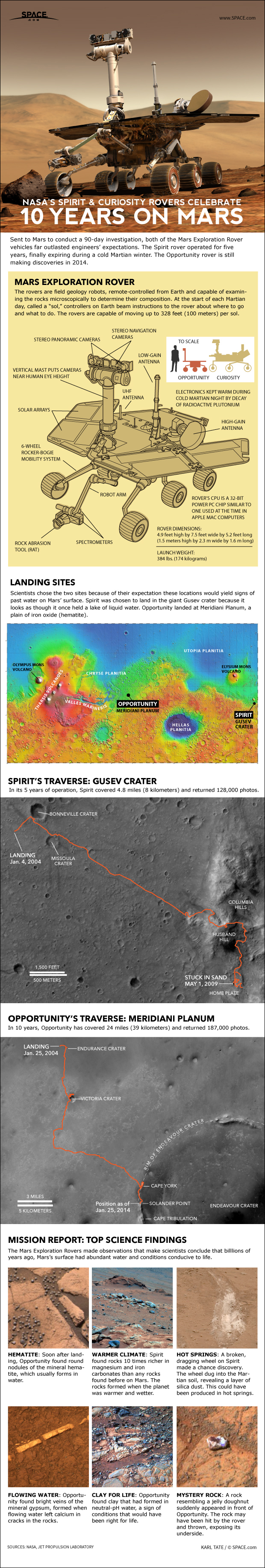

Robotic explorers are designed to be as tough as possible, and they can survive for a long time, even in some of the most extreme conditions the solar system has to offer. For instance, the Mars Exploration Rover (MER) Opportunity landed on Mars in 2004 for what was scheduled to be a three-month mission. A decade later, the robust explorer is still driving across the surface of the Red Planet and collecting valuable data.

However, Opportunity isn't the norm. Its sister rover, Spirit, also kept going and going for an impressive six years, but it has been silent on Mars since March of 2010. And consider the Huygens lander, which parachuted down to the surface of Saturn's moon Titan in 2005. After spending more than six years in transit from Earth to the saturnian moon, Huygens' trip through Titan's dense atmosphere lasted only two and a half hours. Then there's the Soviet Venus lander, Venera 7, which was only able to send back 23 minutes of data before it was destroyed by the harsh venusian environment.

Without mechanics and engineers around to rescue them, no robotic explorer can survive on a distant planet forever.

Robotic missions take an incredible amount of time and effort to build, launch and operate. Hundreds (or sometimes thousands) of people spend years of their lives piecing missions together. This, coupled with the limited lifespan of a robot, means that every second it spends during its mission is incredibly valuable — and scientists want to get the most for their money.

On-the-clock maneuvers

Driving a rover around on another planet is an extremely complicated process. Basically, the robot takes a picture of the landscape in front of it, and then transmits the image back to Earth. Teams of scientists pore over the image looking for interesting sites where the rover can collect data.

Then, mission planners have to decide on a safe route for the rover to follow, mapping every little pebble, rock or towering cliff face that might get in its way.

Commands are sent to the rover that explain exactly how it will get from point A to point B.

The rover begins to drive… and everyone on Earth holds his or her breath. Any mistake on the part of the scientists and mission planners, and the rover might go tumbling down a crater wall.

When the rover finishes driving, it takes another picture and sends it home so that the mission team can see whether or not the drive was successful — and then they start planning where to go next.

This process is like taking baby steps across the surface, and it eats up a lot of time. It also means that the rover can't actually travel very far each day, because each step it takes needs to be meticulously planned and translated into commands. This is compounded by the fact that messages can take up to about 20 minutes to travel one way between Earth and Mars (as an example), and limited bandwidth currently restricts the number of messages that can be sent.
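To put the signal delay mentioned above in numbers, here is a minimal sketch that divides distance by the speed of light. The three Earth-Mars separations are rounded, assumed figures chosen for illustration. They are not mission data, and the function name is hypothetical.

```python
# One-way radio delay between Earth and Mars at a few separations.
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by definition

# Approximate Earth-Mars separations in km (assumed round figures).
SEPARATIONS_KM = {
    "close approach": 55e6,
    "typical": 225e6,
    "near maximum": 400e6,
}


def one_way_delay_minutes(distance_km: float) -> float:
    """Time for a radio signal to cover distance_km, in minutes."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60.0


for label, km in SEPARATIONS_KM.items():
    print(f"{label}: {one_way_delay_minutes(km):.1f} min one way")
```

A round trip (send a command, then see the result) takes twice as long, before any planning time on the ground is counted.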

"It's important to note that communications with planetary spacecraft typically happen just once or twice per day, and in the meantime they normally execute scripted command sequences," said David Thompson, principal investigator on TextureCam. "Adding rudimentary onboard autonomy lets the rover adapt its actions to new data."

When the rover is able to make some decisions on its own, or identify specific targets of interest, it can greatly speed the exploration process along.

"Roughly speaking, instead of telling the rover to 'drive over the hill, turn left 90 degrees and take a picture,' you might tell it to 'drive over the hill and take pictures of all the rocks you see,'" Thompson explained.

In September of 2013, NASA's Curiosity rover, the centerpiece of the Mars Science Laboratory (MSL) mission, made its longest single-day drive up to that point. The rover dashed a total of 464 feet (141.5 meters).

But even though Curiosity is the most advanced rover to touch down on Mars, it's no sprinter. A child under eight, for example, can travel farther in 20 seconds than Curiosity can in an entire day (the U.S. sprinting record for a child under age eight is more than 200 meters in 28.2 seconds). Though the analogy is imprecise, it's safe to say that current robots are still severely limited in mobility when compared to their human counterparts.
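The sprinter comparison above comes down to a few lines of arithmetic. This sketch uses only the figures quoted in the article (a 141.5-meter drive, and 200 meters in 28.2 seconds) and assumes the sprinter holds a constant pace, which is a simplification.

```python
# Figures quoted in the article.
curiosity_drive_m = 141.5   # Curiosity's record single-day drive
sprint_distance_m = 200.0   # sprint distance
sprint_time_s = 28.2        # sprint time

# Assumption: constant running speed over the whole sprint.
sprint_speed_m_s = sprint_distance_m / sprint_time_s
distance_in_20_s = sprint_speed_m_s * 20.0
time_to_match_drive_s = curiosity_drive_m / sprint_speed_m_s

print(f"Sprint speed: {sprint_speed_m_s:.2f} m/s")
print(f"Distance covered in 20 s: {distance_in_20_s:.1f} m")
print(f"Time to match the rover's day: {time_to_match_drive_s:.1f} s")
```

At that pace the runner edges past the rover's full-day distance just before the 20-second mark, so the margin in the comparison is slim.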

"This will be particularly valuable for rover astrobiology missions involving wide-area surveys (seeking rare evidence of habitability)," Thompson said of onboard autonomy. "Here, efficiency improvements can be really enabling since they let us survey faster and visit more locations over the lifetime of the spacecraft."

Robotic field assistants

Autonomous techniques on past and current missions have already helped capture opportunistic scientific data that would have otherwise been missed. One example is images of dust devils on the surface of Mars that were captured by the Mars Exploration Rovers.

"Over time, spacecraft have been getting smarter and more autonomous with respect to both mobility and science data understanding," Thompson said. "This process is making them more active exploration partners, and making it more efficient to collect good quality data."

Curiosity is more autonomous than any other rover yet built for space exploration, and is able to perform some of its own navigation without commands from Earth. When engineers tell the rover where to drive, the rover itself uses software to figure out how to navigate obstacles and travel from A to B.

However, Curiosity doesn't set its own agenda. Teams of scientists are still required to examine images and select scientific targets. This means the rover has to go slowly, allowing human eyes time to examine the surroundings and look for anything of interest.

Thompson and his team at NASA's Jet Propulsion Laboratory (JPL) in Pasadena, Calif., are working on some clever ways to further automate planetary rovers by allowing the robots to select scientifically interesting sites on their own. This involves 'smart' instruments on the rover — instruments that can 'think' for themselves.

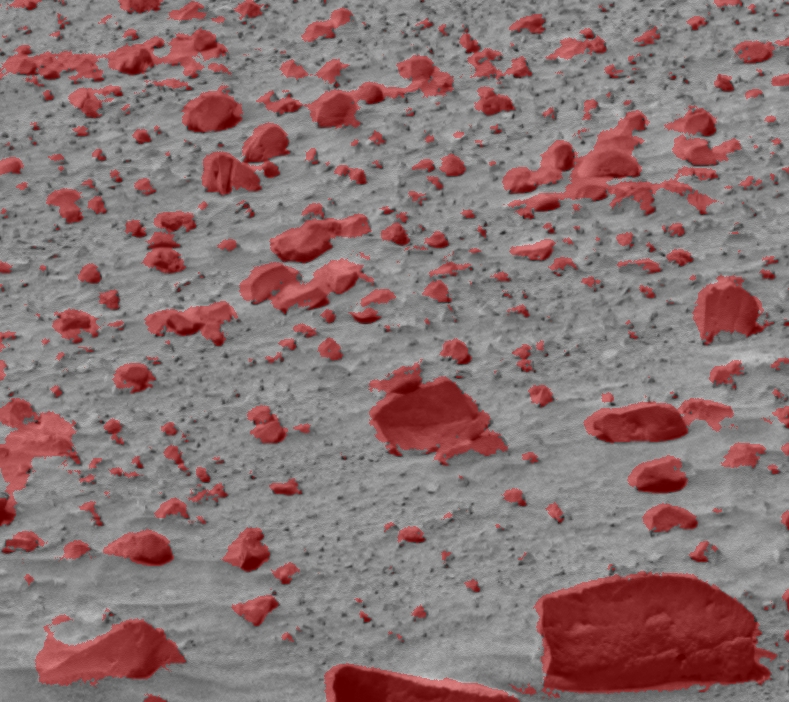

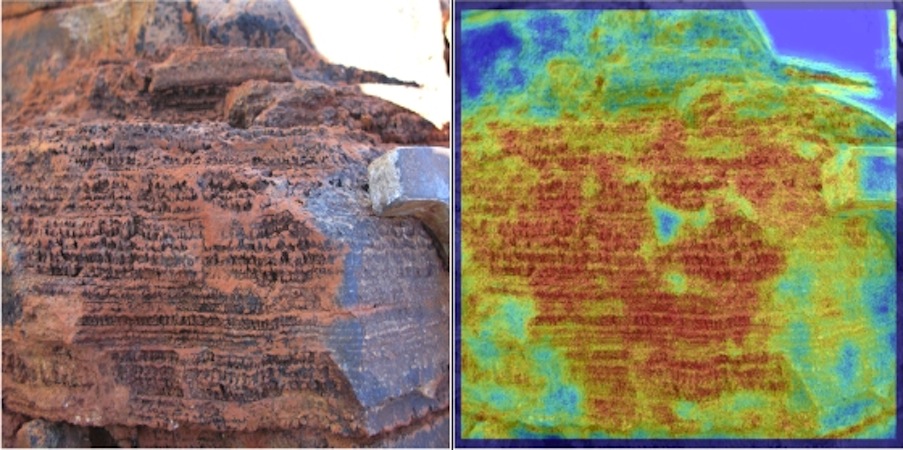

The team is currently developing an instrument called TextureCam, which can pick out geologically interesting rocks all by itself. It works by classifying pixels in an image to identify variations in the texture of rocks. When TextureCam spots something that looks interesting, it knows right away that it's OK to get a bit snap-happy with its camera.

Because the rover takes the extra pictures and sends them back to Earth right away, scientists can immediately begin to assess whether or not the rock is a good target for extended study, rather than taking extra days out of the rover's schedule to collect the additional images.

The rover then becomes a more efficient assistant for humans on Earth, giving them more time to concentrate on the science rather than the logistics of exploration.
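The pixel-classification idea described above can be illustrated with a toy example. The sketch below is not TextureCam's algorithm, which the article does not detail. It stands in a simple local-variance test, labeling a pixel "rough" when brightness varies a lot in its neighborhood. The function names, the threshold and the tiny image are all assumptions made for illustration.

```python
from statistics import pvariance


def local_variance(image, row, col, radius=1):
    """Brightness variance in the window around (row, col), clipped at the edges."""
    rows, cols = len(image), len(image[0])
    window = [
        image[r][c]
        for r in range(max(0, row - radius), min(rows, row + radius + 1))
        for c in range(max(0, col - radius), min(cols, col + radius + 1))
    ]
    return pvariance(window)


def classify_texture(image, threshold=100.0):
    """Label every pixel 'rough' or 'smooth' by its local brightness variance."""
    return [
        ["rough" if local_variance(image, r, c) > threshold else "smooth"
         for c in range(len(image[0]))]
        for r in range(len(image))
    ]


def rough_fraction(labels):
    """Share of pixels labeled 'rough'."""
    flat = [label for row in labels for label in row]
    return flat.count("rough") / len(flat)


# Toy grayscale image: uniform on the left (sand-like), speckled on the right (rock-like).
image = [
    [50, 50, 50, 10, 90, 10],
    [50, 50, 50, 90, 10, 90],
    [50, 50, 50, 10, 90, 10],
    [50, 50, 50, 90, 10, 90],
]

labels = classify_texture(image)
for row in labels:
    print(" ".join(label[0] for label in row))  # 's' = smooth, 'r' = rough
print(f"Rough fraction: {rough_fraction(labels):.2f}")
```

A rover could use a summary such as the rough fraction to decide whether a scene deserves extra images. A flight system would need a classifier trained on real terrain, not a single hand-picked threshold.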

Future paths

The technology behind TextureCam could play a major role in astrobiology research on the surface of planets like Mars. According to Thompson, the team is working on methods for uploading the algorithms they've developed to the Mars Science Laboratory, possibly as part of an extended mission. Opportunities to demonstrate the technology could also come with NASA's planned Mars 2020 rover. But Mars isn't the only place where their work could be applied.

"We think there's value for a wide range of missions. Science autonomy is a concept that applies to any instrument," Thompson said. "It can benefit missions whenever there are restricted bandwidth communications, or transient events that require immediate action from the spacecraft."

Thompson even has some specific ideas about where this technology might be useful in our solar system.

"The general idea of onboard science data understanding could apply to other planetary exploration scenarios," Thompson said. "It could be useful for short mission segments, such as a future Venus landing or a deep space flyby, which happen too quickly for a communications cycle with ground control."

"Speculating a bit," Thompson continued, "autonomy could also be useful for scenarios like Titan boats and balloons if they travel long distances between communications."

The algorithms that are being developed by the team could also have a number of applications in areas closer to home.

"NASA Earth science missions might benefit from this research too," explained Thompson. "For example, typically over half of the planet is covered by clouds, which complicates remote sensing by orbital satellites. One can save downlink bandwidth by excising these clouded scenes onboard, or — when the spacecraft is capable — aiming the sensor at the cloud-free areas."

This automated cloud detection could be useful in detecting weather patterns of interest to climatologists and meteorologists.

"We're currently investigating the use of onboard image analysis to recognize clouds and terrain," Thompson said. "Specifically, we're running experiments onboard the IPEX cubesat. They are the same algorithms we use for autonomous astrobiology, but they turned out to be quite useful for Earth missions as well."
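The bandwidth-saving idea Thompson describes, dropping clouded scenes before downlink, can be sketched as a simple filter. This is a hedged illustration only. A plain brightness threshold stands in for a real cloud classifier, which would also have to separate clouds from snow, ice or bright sand. The function names, thresholds and scene data are hypothetical.

```python
def cloud_fraction(scene, brightness_threshold=200):
    """Fraction of pixels at or above the threshold (treated here as cloud)."""
    pixels = [value for row in scene for value in row]
    cloudy = sum(1 for value in pixels if value >= brightness_threshold)
    return cloudy / len(pixels)


def select_for_downlink(scenes, max_cloud_fraction=0.5):
    """Return the names of scenes whose estimated cloud cover is within the limit."""
    return [
        name
        for name, scene in scenes.items()
        if cloud_fraction(scene) <= max_cloud_fraction
    ]


# Two tiny made-up scenes with 8-bit brightness values.
scenes = {
    "scene_a": [[30, 40, 220], [35, 45, 50]],      # 1 of 6 pixels bright
    "scene_b": [[230, 240, 250], [210, 60, 225]],  # 5 of 6 pixels bright
}

keep = select_for_downlink(scenes)
print("Scenes kept for downlink:", keep)
```

Only the mostly clear scene survives the filter, so the limited downlink is spent on data that is more likely to be usable.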

Earth technology for space

In space science, there are many examples of how technologies developed for space cross over into our everyday lives here on Earth – from Velcro to laptop computers. TextureCam is actually a good example of how this technology crossover can also happen in the other direction.

"It bears mention that the computer-vision strategies we're using are similar to object recognition methods used by commercial sensors and robots," Thompson said.

Many industries, and even household objects, are using similar technologies to automate various processes, like manufacturing or vacuuming our living room carpets.

"The idea of using machine learning for image analysis is not a new one," Thompson said. "It's great that we can leverage some of these ideas for NASA planetary missions."

This story was provided by Astrobiology Magazine, a web-based publication sponsored by the NASA astrobiology program.

Published on SPACE.com.

Dr. Aaron Gronstal is the lead graphic artist and science writer of the NASA Astrobiology Program. He previously wrote for Space.com, covering planetary science and the search for life. A comics and graphic novels lover, Aaron started his career with NASA as a geomicrobiologist and is now expanding his role in science communication for astrobiology as a writer and graphic artist. He's well known for creating the Astrobiology graphic novel history series, which reaches out to a whole new audience of space and science-minded fans.