How Scientists Tackle NASA's Big Data Deluge

Every hour, NASA's missions collectively compile hundreds of terabytes of information, which, if printed out, would require tens of millions of trees' worth of paper.

This deluge of material poses some big data challenges for the space agency. But a team at NASA's Jet Propulsion Laboratory (JPL) in Pasadena, Calif., is coming up with new strategies to tackle problems of information storage, processing and access so that researchers can harness gargantuan amounts of data that would be impossible for humans to parse by hand.

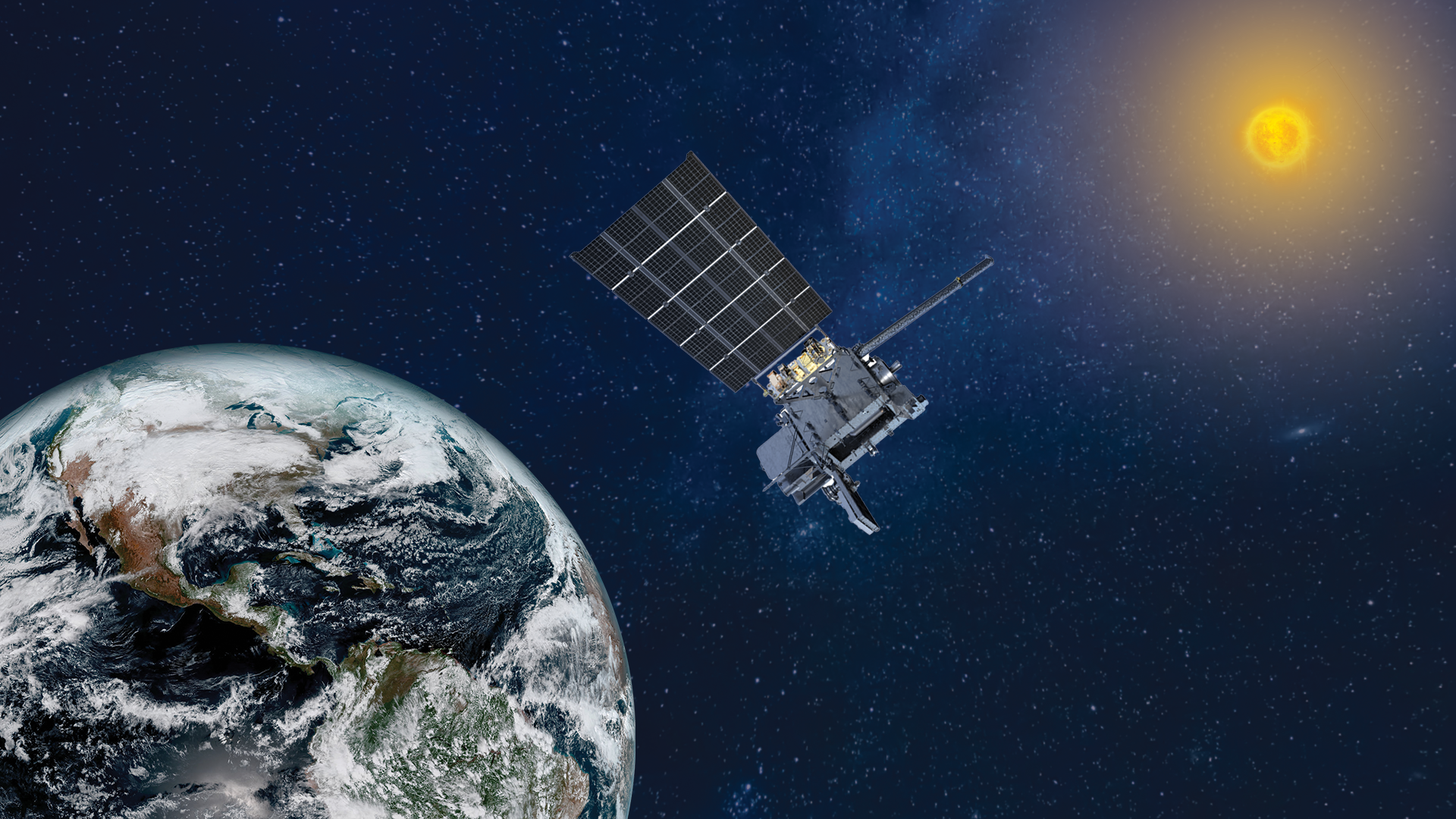

"Scientists use big data for everything from predicting weather on Earth to monitoring ice caps on Mars to searching for distant galaxies," JPL's Eric De Jong said in a statement. De Jong is the principal investigator for one of NASA's big data programs, the Solar System Visualization project, which aims to convert the scientific information gathered in missions into graphics that researchers can use.

"We are the keepers of the data, and the users are the astronomers and scientists who need images, mosaics, maps and movies to find patterns and verify theories," De Jong explained. For example, his team makes movies from data sets such as the 120-megapixel photos taken by NASA's Mars Reconnaissance Orbiter during its surveys of the Red Planet.

But even just archiving big data for some of NASA's missions and other international projects can be daunting. The Square Kilometer Array, or SKA, for example, is a planned array of thousands of telescopes in South Africa and Australia, slated to begin construction in 2016. When it goes online, the SKA is expected to produce 700 terabytes of data each day, which is equivalent to all the data racing through the Internet every two days.
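To put the SKA figure in perspective, the short Python sketch below converts 700 terabytes per day into a sustained data rate. It assumes decimal units (1 TB = 10^12 bytes) and output spread evenly around the clock, neither of which the article specifies; `sustained_rate` is a hypothetical helper for illustration, not part of any JPL or SKA software.

```python
# Hypothetical helper: convert a daily data volume into a sustained rate.
# Assumes decimal units (1 TB = 10**12 bytes) and evenly spread output,
# neither of which the article specifies.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def sustained_rate(terabytes_per_day: float) -> tuple[float, float]:
    """Return (gigabytes per second, gigabits per second) for a daily volume."""
    bytes_per_second = terabytes_per_day * 10**12 / SECONDS_PER_DAY
    gigabytes_per_second = bytes_per_second / 10**9
    gigabits_per_second = bytes_per_second * 8 / 10**9
    return gigabytes_per_second, gigabits_per_second


gb_s, gbit_s = sustained_rate(700)
print(f"{gb_s:.1f} GB/s, {gbit_s:.1f} Gbit/s")  # prints "8.1 GB/s, 64.8 Gbit/s"
```

Under those assumptions, the array would have to move roughly 8 gigabytes every second, day and night, just to keep pace with its own output.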

JPL researchers will help archive this flood of information. Rather than inventing new products for projects like the SKA, big data specialists at the lab say they are using existing hardware, developing cloud computing techniques and adapting open-source programs to suit their needs.

"We don't need to reinvent the wheel," Chris Mattmann, a principal investigator for JPL's big data initiative, said in a statement. "We can modify open-source computer codes to create faster, cheaper solutions."

NASA's big data team is also devising new ways to make this archived information more accessible and versatile for public use.

"If you have a giant bookcase of books, you still have to know how to find the book you're looking for," Steve Groom, of NASA's Infrared Processing and Analysis Center at the California Institute of Technology, explained in a statement.

Groom's center manages data from several NASA astronomy missions, including the Spitzer Space Telescope and the Wide-field Infrared Survey Explorer (WISE).

"Astronomers can also browse all the 'books' in our library simultaneously, something that can't be done on their own computers," Groom added.

Follow Megan Gannon on Twitter and Google+. Originally published on SPACE.com.

Megan has been writing for Live Science and Space.com since 2012. Her interests range from archaeology to space exploration, and she has a bachelor's degree in English and art history from New York University. Megan spent two years as a reporter on the national desk at NewsCore. She has watched dinosaur auctions, witnessed rocket launches, licked ancient pottery sherds in Cyprus and flown in zero gravity on a Zero Gravity Corp. flight to follow students sparking weightless fires for science. Follow her on Twitter for her latest project.