Stop Complaining about 'Fake' Colors in NASA Images

Paul Sutter is an astrophysicist at The Ohio State University and the chief scientist at the COSI science center. Sutter is also host of Ask a Spaceman, RealSpace and COSI Science Now.

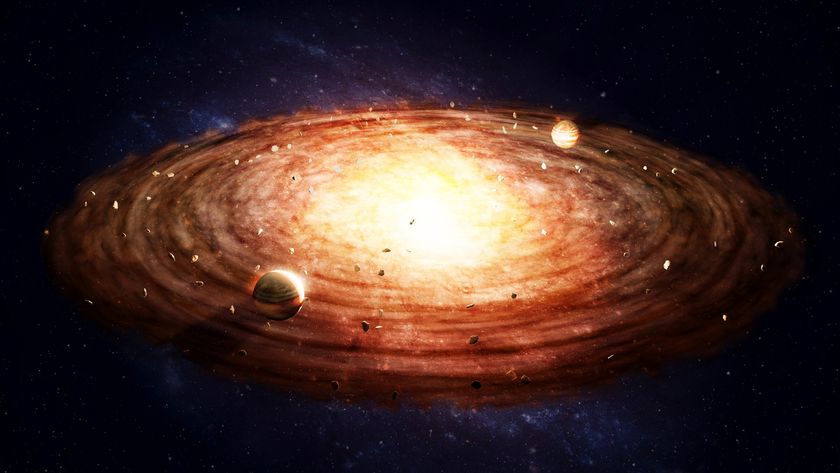

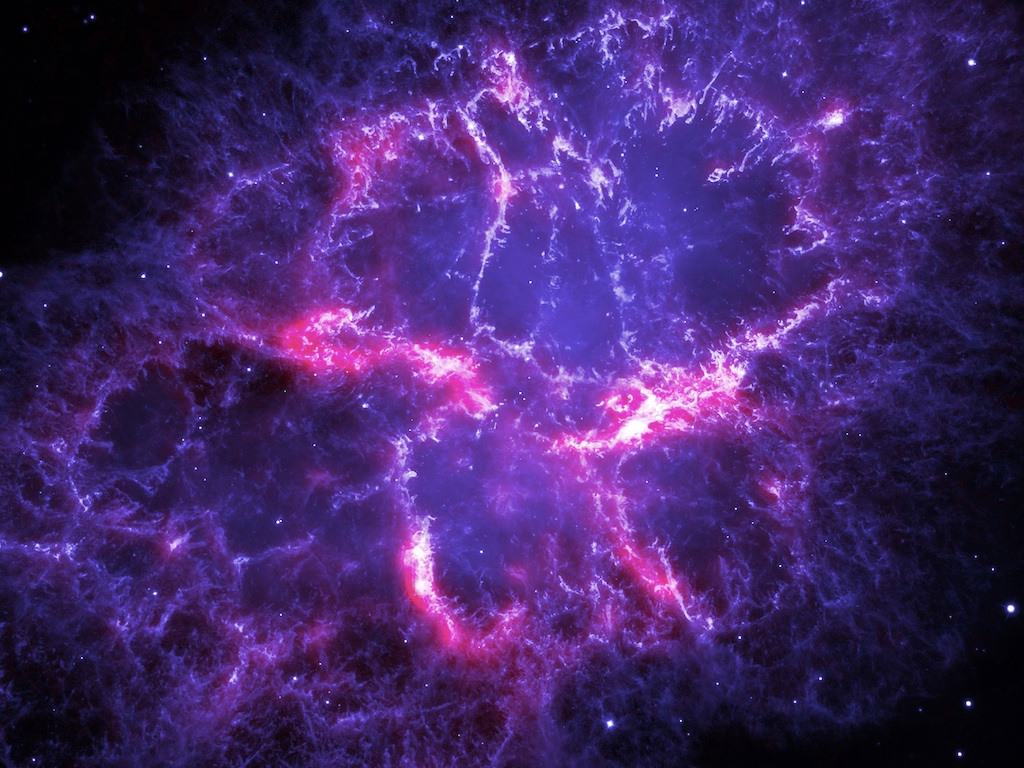

We hear it all the time. Well, maybe you don't, but I get this thrown at me a lot. We see beautiful images released by NASA and other space agencies: ghostly nebulas giving tantalizing hints of their inner structures, leftover ruins of long-dead stellar systems, furious supernovae caught in the act of exploding and newborn stars peeking out from their dusty wombs.

Instead of just sitting back, relaxing and enjoying the light show the universe is putting on, some people feel compelled to object: But those colors are fake! You wouldn't see that nebula with your eyes! Binoculars and telescopes wouldn't reveal that supernova structure! Nothing in the universe is that shade of purple! And so on.

A light bucket

First, it's important to describe what a telescope is doing, especially a telescope with a digital camera attached. The telescope itself is an arrangement of tubes, mirrors and/or lenses that enables the instrument to capture as much light as possible. Obviously, it pulls in much more light than the human eye does, or it wouldn't be very good at what it was built to do. So, naturally, telescopes will see really faint things — things you'd never see with your eyes unless you hitched a ride on a wandering rogue exoplanet and settled in for a million-year cruise.

A telescope's second job is to shove all those astronomical photons into a tiny spot that can fit into your pupil; otherwise, it would just dump the light on your whole face, which wouldn't be very interesting or useful. That act of focusing also magnifies images, making them appear much larger than in real life.

So, already, a telescope is giving you an artificial view of the heavens.

Your retinas have special light-sensitive cells (aka rods and cones), and the cones can pick out different colors. But digital sensors — like the one you might use to take a selfie — aren't sensitive to colors at all. They can only measure the total amount of light slamming into them. To get around this, cameras put color filters in front of the sensor and either employ multiple sets of sensors or combine multiple readings from the same sensor.

Either way, the result is the same: an avalanche of data about the properties of the light that hit the device at the same moment you were taking your picture. Fancy software algorithms reconstruct all this data into an image that kinda, sorta approximates what your eyes would've seen without the digital gear.
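To make the filter-and-combine idea concrete, here is a minimal Python sketch, assuming three monochrome exposures of the same scene taken through red, green and blue filters and stored as plain nested lists of raw counts. The function names `normalize` and `compose_rgb` are hypothetical, and the simple linear stretch to the 0-255 range is an assumption chosen for illustration; real camera and telescope pipelines add calibration and much more careful scaling.

```python
def normalize(frame):
    """Linearly stretch a 2D list of raw sensor counts to the 0-255 range.

    Assumption: a plain min/max stretch; real pipelines calibrate first.
    """
    lo = min(min(row) for row in frame)
    hi = max(max(row) for row in frame)
    span = (hi - lo) or 1  # avoid dividing by zero for a perfectly flat frame
    return [[round(255 * (v - lo) / span) for v in row] for row in frame]


def compose_rgb(red, green, blue):
    """Stack three filtered monochrome exposures into (R, G, B) pixels.

    Each argument is one exposure of the same scene through one filter;
    all three must have the same dimensions.
    """
    r, g, b = normalize(red), normalize(green), normalize(blue)
    return [
        [(r[y][x], g[y][x], b[y][x]) for x in range(len(r[0]))]
        for y in range(len(r))
    ]
```

For example, `compose_rgb([[0, 10]], [[5, 5]], [[10, 0]])` gives `[[(0, 0, 255), (255, 0, 0)]]`: the first pixel was bright only through the blue filter and the second only through the red one. Even in this toy version, the final colors depend on choices (here, the stretch) made by software, not by your eyes.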

But as anyone who has had to fiddle with exposure and lighting settings knows, it's far from a one-to-one, human-computer match.

Doing science

If you've ever played with filters before posting a selfie, you did it for a reason: You wanted the picture to look better.

Scientists want pictures to look better, too — for the sake of science. Researchers take pictures of stuff in space to learn about how it works, and some higher contrast here or a little brightening over there can help us understand complex structures and relationships within and between them.

So don't blame NASA for a little photo touch-up; they're doing it for science.

The colors of the universe

But what about adding colors? If you took a census, the most common colors in the universe would perhaps be red and blue. So if you're looking at a gorgeous Hubble Space Telescope image and see lots of those two colors, it's probably close to what your unaided eye would see.

But a broad wash of green? A sprinkling of bright orange? Astrophysical mechanisms don't usually produce colors like that, so what's the deal?

The deal is, again, science. Researchers will often add artificial colors to pick out some element or feature that they're trying to study. When heated, elements glow at very specific wavelengths of light. Sometimes that light is within human perception but gets washed out by other colors in the picture, and sometimes the light's wavelength is altogether beyond the visible.

But in either case, we want to map out where that element is in a particular nebula or disk. So scientists will highlight that feature to get clues to the origins and structure of something complex. "Wow, that oxygen-rich cloud is practically wrapped around the disk! How scientifically fascinating!" You get the idea.

Superhero senses

Ever since William Herschel accidentally discovered infrared radiation, scientists have known that there's more to light than … light. Redder than the deepest reds gives you infrared, microwaves and radio. Violet-er than the deepest violet gives you ultraviolet, plus X-rays and gamma-rays.

Scientists have telescopes to detect every kind of electromagnetic radiation there is, from tiny bullet-like gamma-rays to radio waves that are meters across. The telescope technologies are pretty much always the same, too: collect light in a bucket, and focus it into a central spot.

So, of course, scientists would like to make a map. After all, we did spend quite a bit of money to build the telescope. But what color is a gamma-ray that comes from a distant supernova? What hue is a radio emission from an active galaxy? We need to map all this data onto something palatable to human senses, and we do that by assigning artificial colors to the images.
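One common way to assign those artificial colors is chromatic ordering: the shortest-wavelength band is displayed as blue, the longest as red and the one in between as green, so the color order at least follows the physical order of the wavelengths. The Python sketch below assumes exactly three bands; the band names, the sample wavelengths (about a nanometer for X-rays, 10 micrometers for infrared, 21 centimeters for radio) and the helper names `chromatic_order` and `false_color_pixel` are hypothetical and chosen only for illustration.

```python
def chromatic_order(bands):
    """Assign display colors to three bands by wavelength.

    `bands` maps a band name to its wavelength in meters (illustrative
    values). Chromatic ordering: shortest wavelength -> blue,
    middle -> green, longest -> red.
    """
    if len(bands) != 3:
        raise ValueError("expected exactly three bands")
    shortest, middle, longest = sorted(bands, key=bands.get)
    return {shortest: "blue", middle: "green", longest: "red"}


def false_color_pixel(values, assignment):
    """Build one (R, G, B) pixel from per-band intensities in 0-255.

    `values` maps band name -> intensity; `assignment` maps band name ->
    display color, e.g. the output of chromatic_order.
    """
    channel = {color: values[name] for name, color in assignment.items()}
    return (channel["red"], channel["green"], channel["blue"])
```

With `bands = {"x-ray": 1e-9, "infrared": 1e-5, "radio": 0.21}`, `chromatic_order(bands)` displays the X-rays as blue, the infrared as green and the radio as red, so `false_color_pixel({"x-ray": 10, "infrared": 20, "radio": 30}, chromatic_order(bands))` returns `(30, 20, 10)`. Any other mapping would be equally "fake"; the point is that the palette is a deliberate, documented convention.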

Without that, we wouldn't be able to actually do science.

Learn more by listening to the episode "How do we see beyond the visible?" on the Ask a Spaceman podcast, available on iTunes and on the Web at http://www.askaspaceman.com. Thanks to Elizabeth M. for the question that led to this piece! Ask your own question on Twitter using #AskASpaceman or by following Paul @PaulMattSutter and facebook.com/PaulMattSutter. Originally published on Space.com.

Paul M. Sutter is an astrophysicist at SUNY Stony Brook and the Flatiron Institute in New York City. Paul received his PhD in Physics from the University of Illinois at Urbana-Champaign in 2011, and spent three years at the Paris Institute of Astrophysics, followed by a research fellowship in Trieste, Italy. His research focuses on many diverse topics, from the emptiest regions of the universe to the earliest moments of the Big Bang to the hunt for the first stars. As an "Agent to the Stars," Paul has passionately engaged the public in science outreach for several years. He is the host of the popular "Ask a Spaceman!" podcast, author of "Your Place in the Universe" and "How to Die in Space," and he frequently appears on TV, including on The Weather Channel, for which he serves as Official Space Specialist.