NASA's HoloLens Demo Puts Researchers on Mars, Space Station and Workbench

NEW YORK — Visitors from NASA's Jet Propulsion Lab (JPL) walked on Mars, explored a 3D prototype and even dangled a rover over the audience's head during an augmented reality demo and talk at New York University's Tandon School of Engineering MakerSpace event space in Brooklyn Nov. 7.

Matthew Clausen, creative director of JPL's Ops Lab, joined Marijke Jorritsma, an NYU graduate student and intern at JPL, to demonstrate the research and exploration capabilities of the Microsoft HoloLens, a headset that can project virtual images — from the surface of Mars to a repair schematic — over the real world.

The two presenters wore HoloLens headsets to present the work, and the virtual viewpoint was projected on a screen behind them so the audience members could follow along.

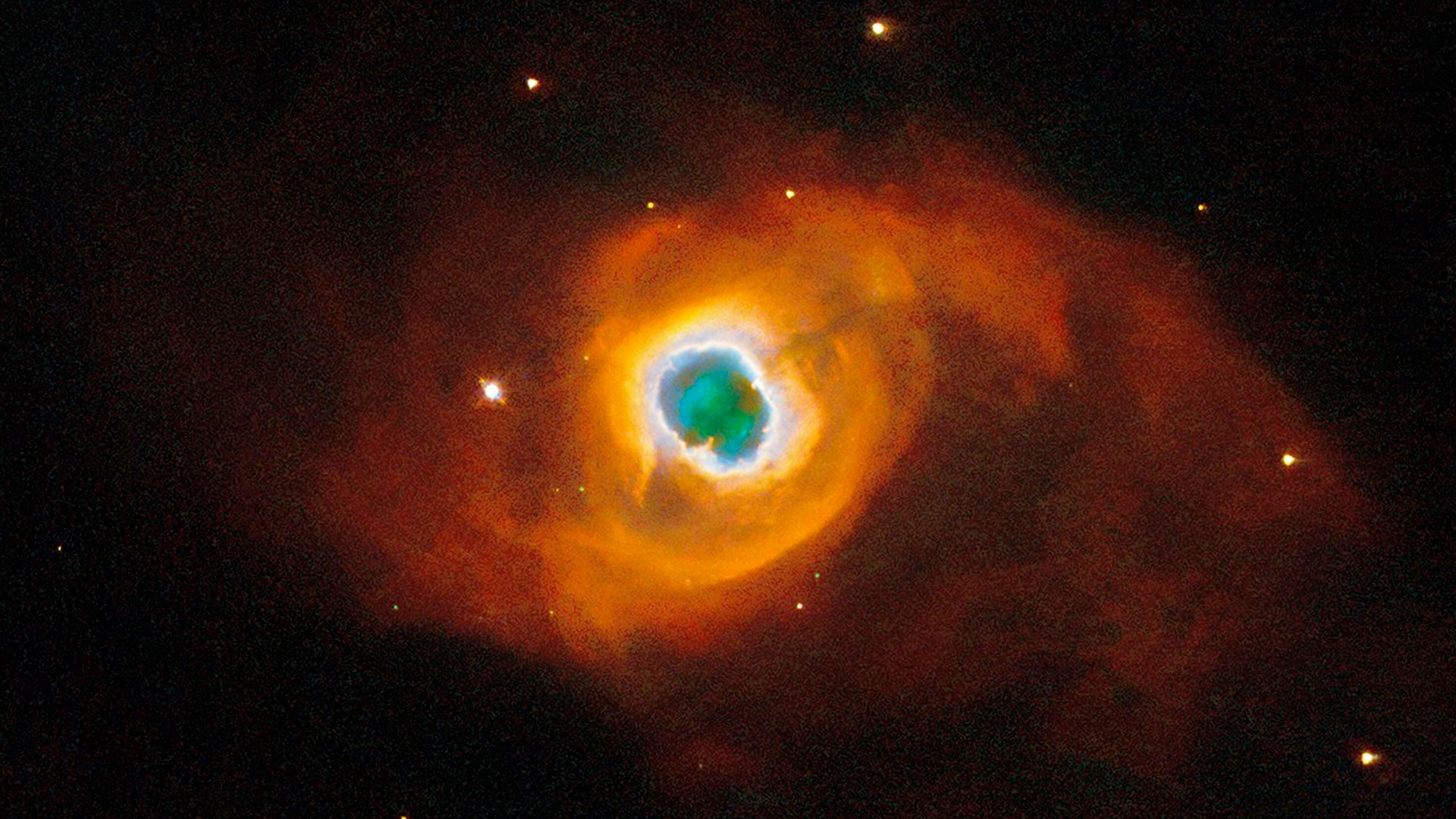

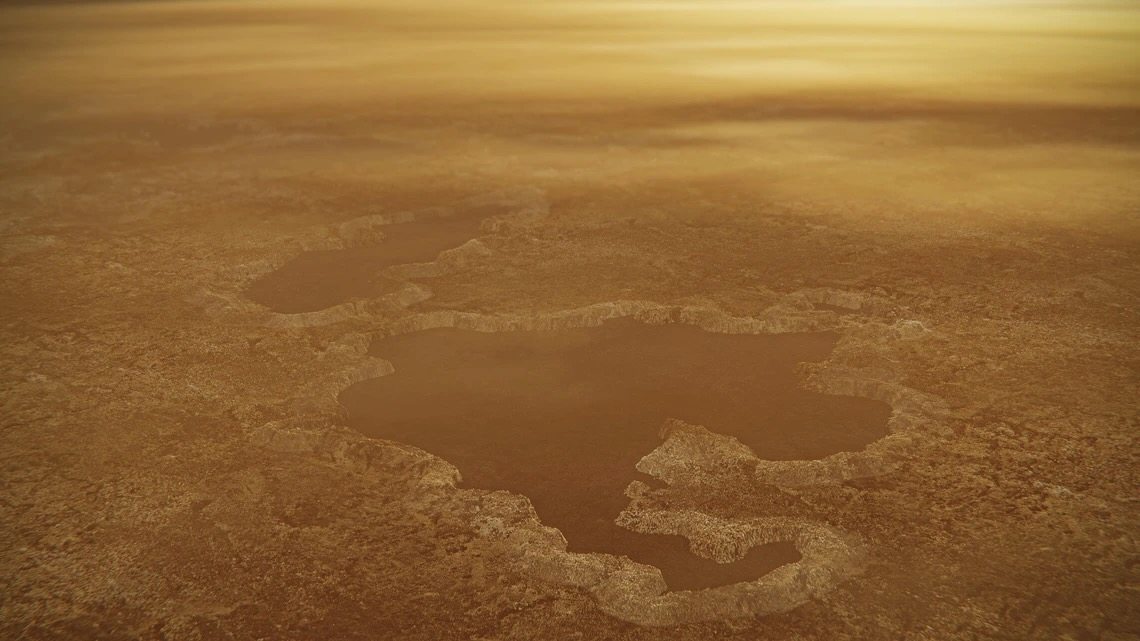

They first presented OnSight, a virtual reconstruction of the Martian surface that researchers can work in collaboratively — for instance, to set a course and targets for the Curiosity Mars rover. The technology was recently rolled out at Kennedy Space Center, where visitors can get a tour of Mars from a holographic Buzz Aldrin.

Until now, researchers have plotted Martian locations based on long, flat panoramas of the surface taken by rovers. Clausen's group found that researchers were twice as accurate at determining distances and three times as accurate at determining angles between specific Martian locations when they could look around from within the Martian scene.

And the researchers — many of them geologists who are used to working outside, in the field — found the tool very natural to use, as well.

"One of the interesting things that happened when scientists first used this is that they realized they could run up a hill to get spatial awareness of the scene," Jorritsma said. "So they immediately were able to start using it and thinking about it in a spatial way, as soon as they put on the devices."

"That was kind of our first clue that things were going in the right direction," Clausen added.

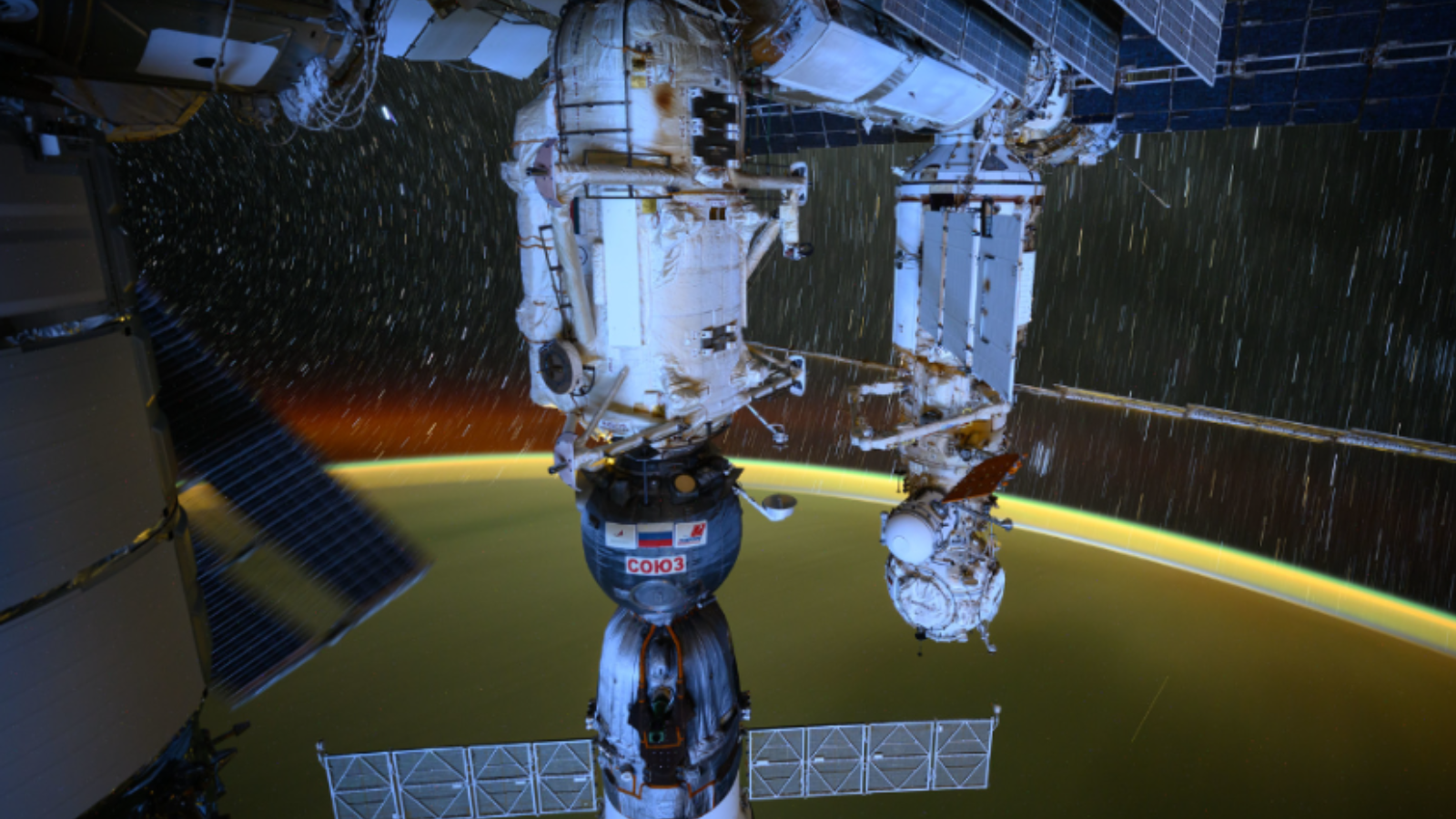

The two presenters also discussed a second application, called Project Sidekick, which lets experts guide astronauts on the International Space Station through complicated procedures by watching the astronauts' actions and overlaying guidance, diagrams and extra information.

Although they didn't demonstrate that program live, the speakers shared photos and video of Project Sidekick tests: first on NASA's Extreme Environment Mission Operations (NEEMO), an underwater facility that simulates the space station, where researchers were led remotely through the steps of diagnosing and treating appendicitis, among other tasks, and then on a weightlessness-simulating plane. Finally, they tested it on the space station, where astronaut Scott Kelly communicated with the ground while wearing the headset. (Kelly and British astronaut Tim Peake also played a virtual alien-zapping game with the headsets.)

With HoloLens guidance, aquanauts on NEEMO were able to perform activities in just an hour instead of the 4 hours it took them when they used written procedures. On the station, where things like opening hatches and putting out fires come with complicated, multistep procedures, Project Sidekick could be a major timesaver, Clausen said.

Third, the two demonstrated Protospace, which lets engineers explore detailed models of spacecraft and machinery as they're designed. Researchers have used Protospace to design the Surface Water and Ocean Topography satellite, which NASA plans to launch in 2020 to observe climate change's effect on the oceans, as well as NASA's Mars 2020 rover, which will collect a sample from the Martian surface to bring back to Earth, and a large orbiter to circle Jupiter's moon Europa. The speakers summoned a virtual Mars 2020, as depicted on the screen, and examined it from different angles — even enlarging it and dangling it over the audience's head.

"It transforms spacecraft design, in that it allows for a group of mechanical engineers to collaboratively visualize something in a true-to-scale and embodied fashion, which is something that they could never do before, unless they spent a lot of time and money doing a 3D print," Jorritsma said. "Everyone is in the room, usually, when they're using it, and they can gesture with their hand and everyone knows what they're talking about."

The tool lets researchers see how parts fit together better than in normal 2D modeling, and they can work on models together or practice tricky installation tasks. NYU engineering students this semester are helping to build a toolbar for the design interface.

"I can't wait for the day when we actually set foot on Mars," Clausen said. "There's actually not just going to be the astronaut walking around, but there will be millions of people here on Earth that are untethered from the limitations that they have, because it will be safe for them to fly above the surface and go ahead of the astronauts and actually help them gather the data." Such data would be gathered by orbiters, small satellites and rovers on the Martian surface, he clarified, but it could be combed through directly by people inspecting the surface virtually.

"It's not just going to be the people at JPL, or at the other space centers around the country and around the world, but we imagine a future in which it's actually all of you; anyone that has access to this immersive technology, libraries, in their schools, in their basements, all being able to participate in the exploration of these new worlds together," he added.

Email Sarah Lewin at slewin@space.com or follow her @SarahExplains. Follow us @Spacedotcom, Facebook and Google+. Original article on Space.com.

Sarah Lewin started writing for Space.com in June of 2015 as a Staff Writer and became Associate Editor in 2019. Her work has been featured by Scientific American, IEEE Spectrum, Quanta Magazine, Wired, The Scientist, Science Friday and WGBH's Inside NOVA. Sarah has an MA from NYU's Science, Health and Environmental Reporting Program and an AB in mathematics from Brown University. When not writing, reading or thinking about space, Sarah enjoys musical theatre and mathematical papercraft. She is currently Assistant News Editor at Scientific American. You can follow her on Twitter @SarahExplains.