Why You Shouldn't Expect to See 'Blade Runner' Replicants Anytime Soon

Fans of the 1982 sci-fi-noir thriller "Blade Runner" had to wait more than a quarter-century for the follow-up film "Blade Runner 2049," which opened in U.S. theaters on Oct. 6. But they'll likely have to wait much, much longer to see any semblance of the films' human-mimicking androids — dubbed "replicants" — in the real world, experts told Live Science.

The original film was set in the year 2019, and "Blade Runner 2049" takes place just 30 years later. But although the realms of both films exist in the not-too-distant future, replicants represent astonishingly sophisticated technology compared to what is available today. These androids are practically indistinguishable from people — they move, speak and behave as humans do, and they are programmed to be autonomous, self-reliant and even remarkably self-aware.

Today's engineers and programmers have made great strides in robotics and artificial intelligence (AI) since the first "Blade Runner" movie debuted, yet the prospect of human-like replicants still seems as distant as it was 35 years ago. How close are we to developing robots that can pass for humans?

For decades, programmers have worked to develop computer systems called neural networks. These systems form connections in a way that resembles how the human brain does, and they can be used to train a computer to learn certain tasks. And while computers may not yet be able to mimic a fully functioning human brain, they have shown a growing ability to "learn" to do things that were previously thought to be impossible for machines.

Knight takes rook

In 1997, an IBM computer named Deep Blue demonstrated for the first time that artificial intelligence could "think" its way to victory against a human chess champion. Capable of exploring up to 200 million possible chess moves per second, Deep Blue defeated chess champion Garry Kasparov in a six-game match played over several days. In beating Kasparov, Deep Blue showed that computers could learn to make complex and strategic choices by referencing a vast database of potential responses, according to IBM's website.

Another IBM computer, named Watson, took on an even more complicated task in 2011, competing against human contestants on the television quiz show "Jeopardy!" and besting two previous champions. Watson's "brain" was more sophisticated than Deep Blue's: it addressed questions posed in natural language and presented answers drawn from data that had been input over the months before the competition, according to IBM.

Then, in 2016, AI gameplay got a significant upgrade when an AI system called AlphaGo defeated a human player in a game of Go, thought by many to be the most complicated strategy game ever invented by humans. AlphaGo learned to become a master player by "watching" millions of games and using two types of neural networks: one to evaluate the status of a game, and one to determine its next move, the programmers explained at a news conference that year.

Recently, neural networks have even enabled computers to explore artistic pursuits, such as composing a holiday song, creating images of dinosaurs made entirely out of flowers and churning out five chapters of a "novel" continuing the "Game of Thrones" saga.

Living is harder

But sci-fi stories rarely explain what's going on under the hood of an android, and passing for "human" is harder than it looks. For a neural network to coordinate realistic physical activity in a robotic body alongside interactions that correctly use emotional inflection and social nuances, programmers would have to input massive quantities of data, and the network would need processing capabilities far beyond those of any AI around today, Janelle Shane, an electrical engineering researcher who trains neural networks, told Live Science.

"The world is so varied — that's one of the difficulties," Shane said. "There are so many things that a neural network can encounter."

"You can train a neural network to be reasonably good at simple tasks, but if you try to get them to do a lot of different tasks at once — speaking, recognizing an object, moving limbs — each of those is a really tough problem. It's hard to anticipate what they can encounter and make them adapt to that," she said.

Shane has programmed neural networks to do things that sound fairly simple, compared to a replicant's repertoire: generating names for paint colors or guinea pigs, or assembling spells for the role-playing game "Dungeons and Dragons" (D&D). For the spells experiment, Shane incorporated a database of 1,300 examples to teach the neural network what a D&D spell is supposed to sound like. Even so, some results were noticeably odd, she told Live Science.

"I had a whole series of spells — I'm still not sure why — that all revolved around the word 'Dave,' which was not in the original data set," Shane said. The spells, which are listed on her website, include "Chorus of the Dave," "Charm of the Dave," "Storm of the Dave" and "Hail to the Dave." There was also a Dave-less but still perplexingly named spell, "Mordenkainen's lucubrabibiboricic angion."

"It was a success in terms of being entertaining, but it was not a success in terms of sounding like the wizards who are inventing D&D spells," Shane said.

The body electric

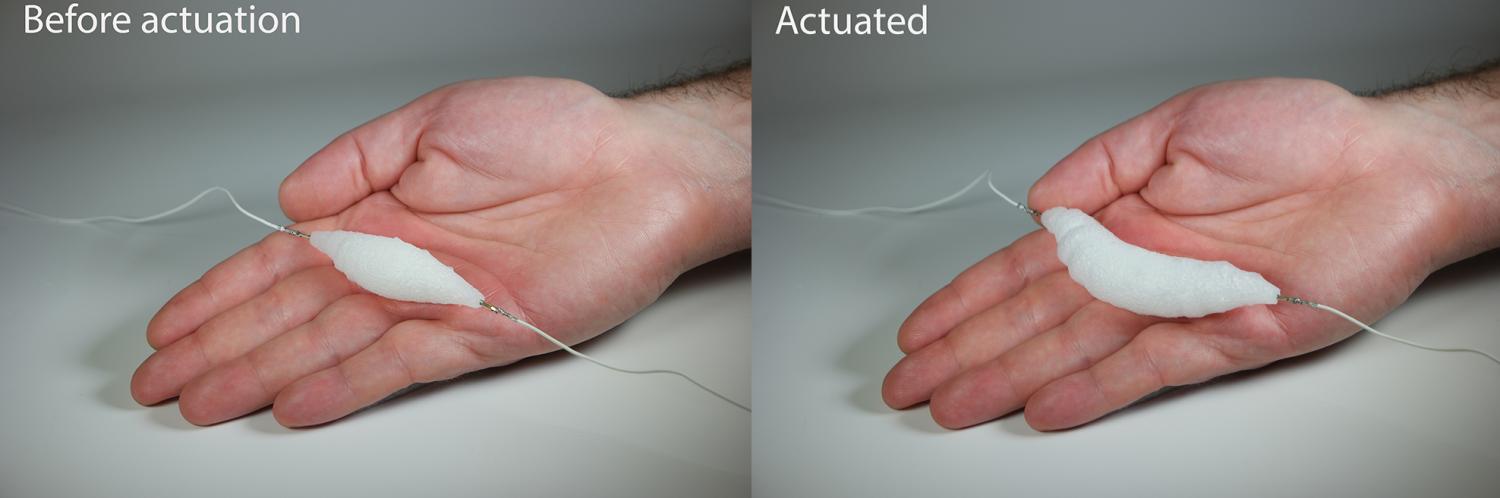

In recent decades, there have also been advances in the design of humanoid, bipedal robotic bodies, though they are still a far cry from the human-like replicants. However, recent design innovations offer the possibility of integrating more soft parts into robots to help them move more like people do, said Hod Lipson, a professor of mechanical engineering and data science at Columbia University in New York.

"We have been stuck in this corner of what's possible in robotics, simply because we've only had access to stiff, rigid components: hard motors, metal pieces, hard joints," Lipson told Live Science. "But if you look at biology, you see that animals are mostly made of soft materials, and that gives them a lot of capabilities that robots don't have."

Lipson and his colleagues recently designed a 3D-printed soft "muscle" for robots — a mechanism for movement control known as an "actuator," Lipson said. Made of synthetic materials, the actuator is flexible, electrically activated and about 15 times stronger than a human muscle. An actuator such as this, which can move in response to a stimulus, was "almost a missing link when it comes to physical robotics," Lipson said.

"We've solved a lot of other things, but when it comes to motion, we're still fairly primitive," he said. "It's not that the actuator that we've come up with is necessarily going to solve everything, but it definitely addresses one of the weak points of this new kind of robotics."

Over the past decade, strikingly lifelike robots cloaked in artificial skin have appeared briefly at conferences or have been tested in studies, but there's a good reason why we haven't seen any walking the streets — let alone performing the spectacular acrobatic feats demonstrated by replicants, Lipson said.

"Robots really can't handle unstructured physical environments very well. There's a huge gap there," he said.

"In many of these movies, we're somehow skipping the question of how you make these machines in reality," Lipson added. "No one has any clue how to make a machine that's nimble, that can store power inside and walk for days.

"If I had to predict the future, I think we'll get to the human-mind level in AI very soon," he said. "But when it comes to the body, it will take another century."

Original article on Live Science.
