Dark energy remains a mystery. Maybe AI can help crack the code

"If you wanted to get this level of precision and understanding of dark energy without AI, you'd have to collect the same data three more times in different patches of the sky."

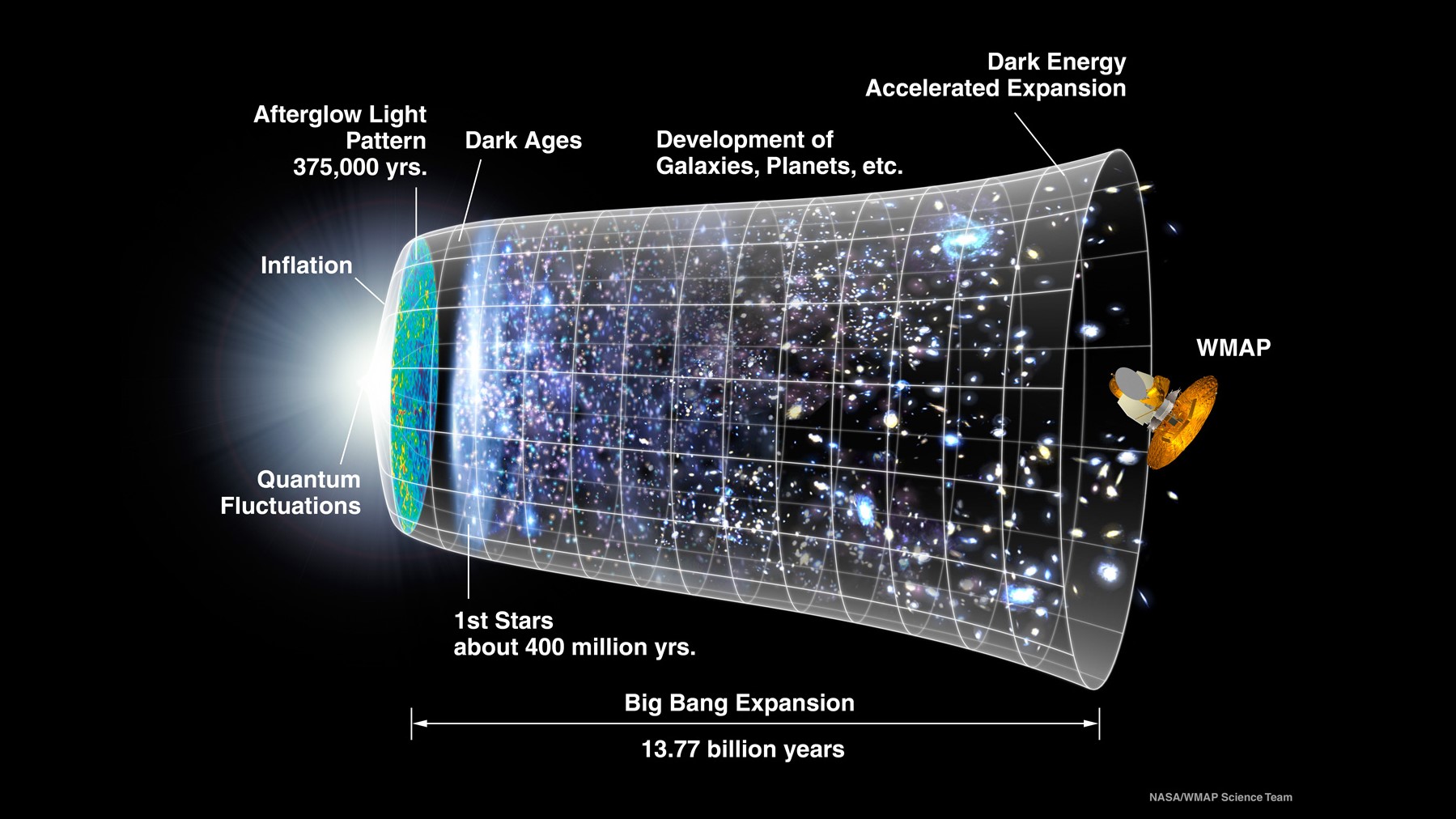

As humans struggle to understand dark energy, the mysterious force driving the universe's accelerated expansion, scientists have started to wonder something rather futuristic. Can computers do any better? Well, initial results from a team that used artificial intelligence (AI) techniques to infer the influence of dark energy with unmatched precision may suggest an answer: Yes.

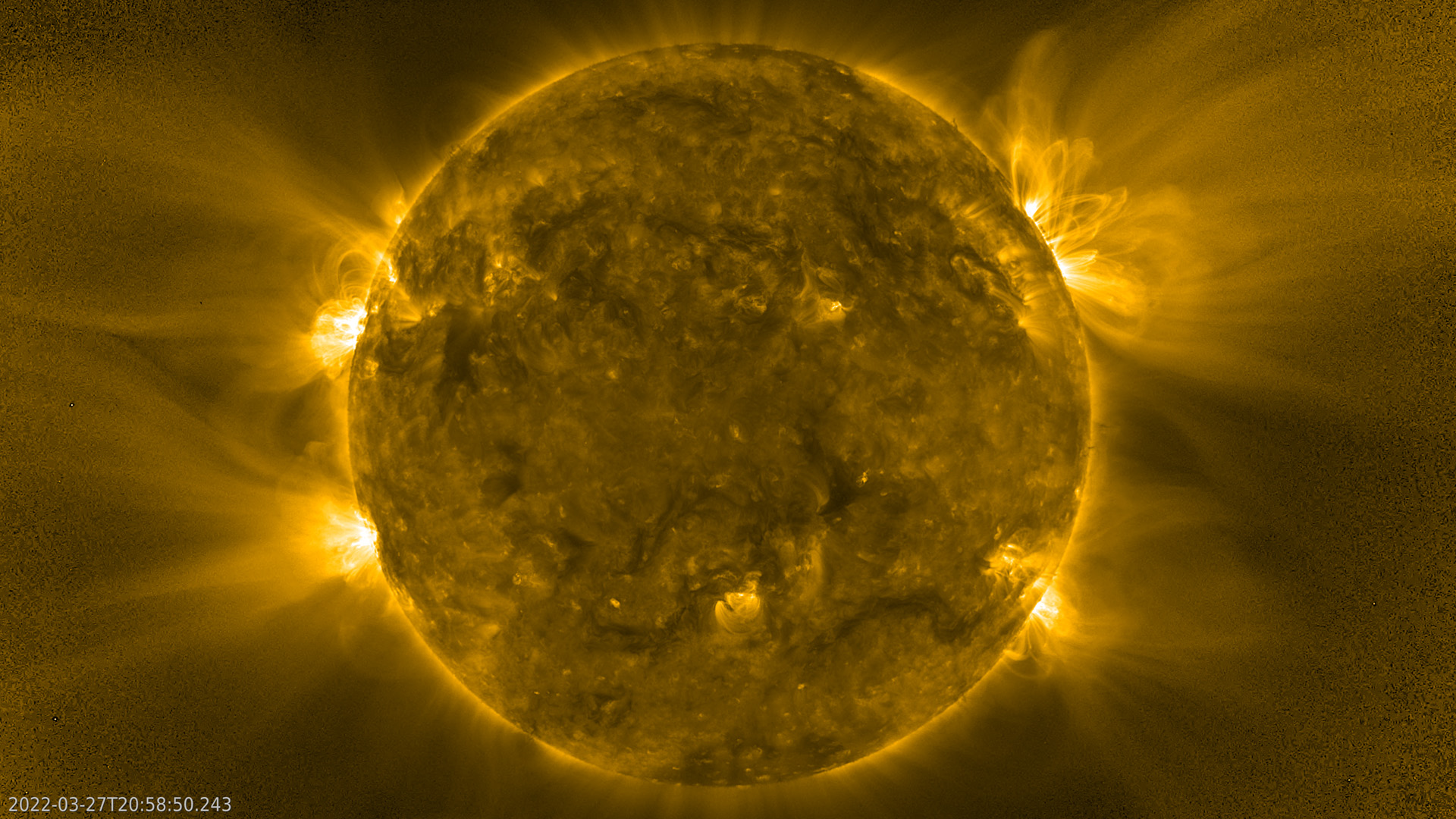

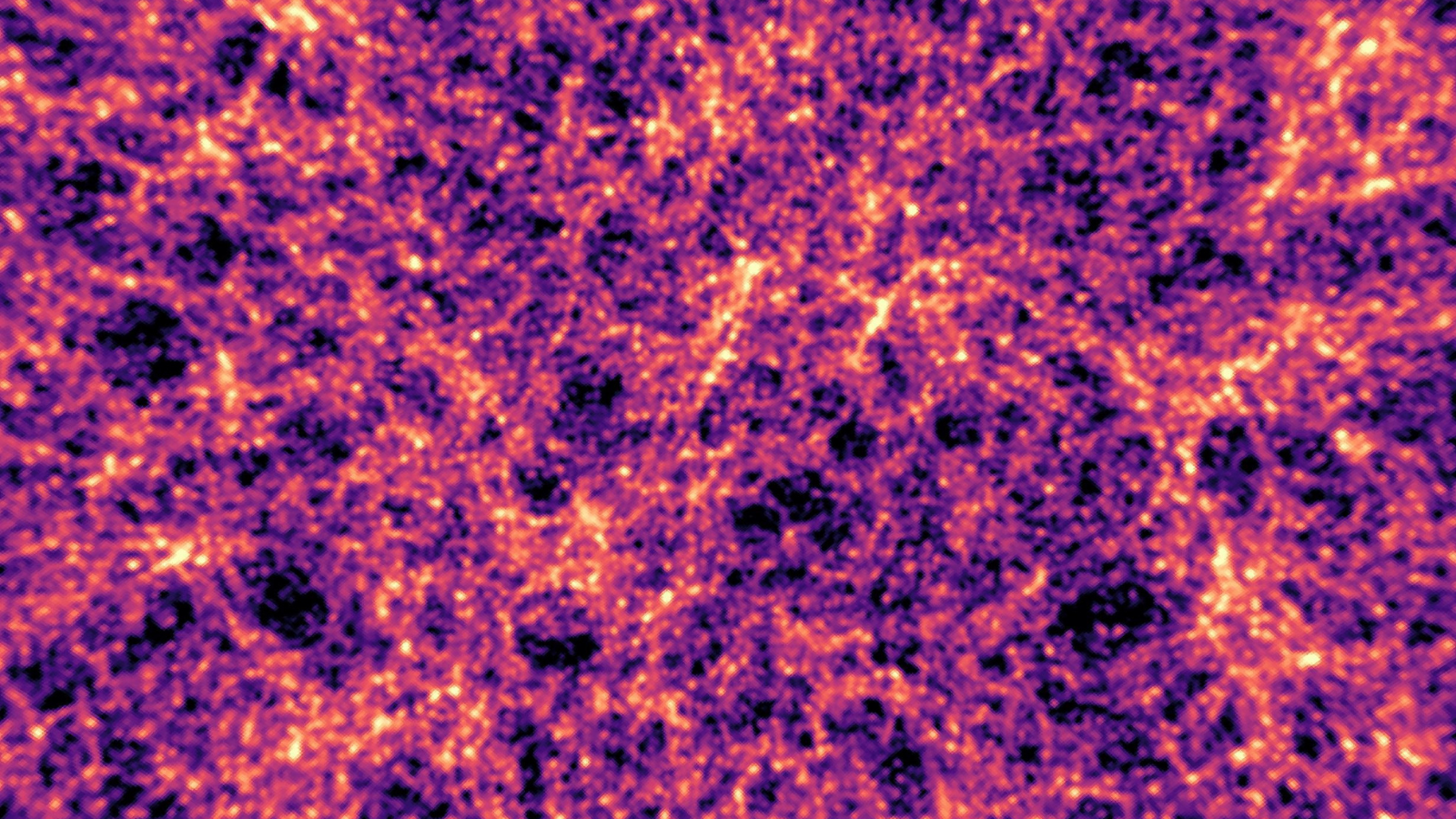

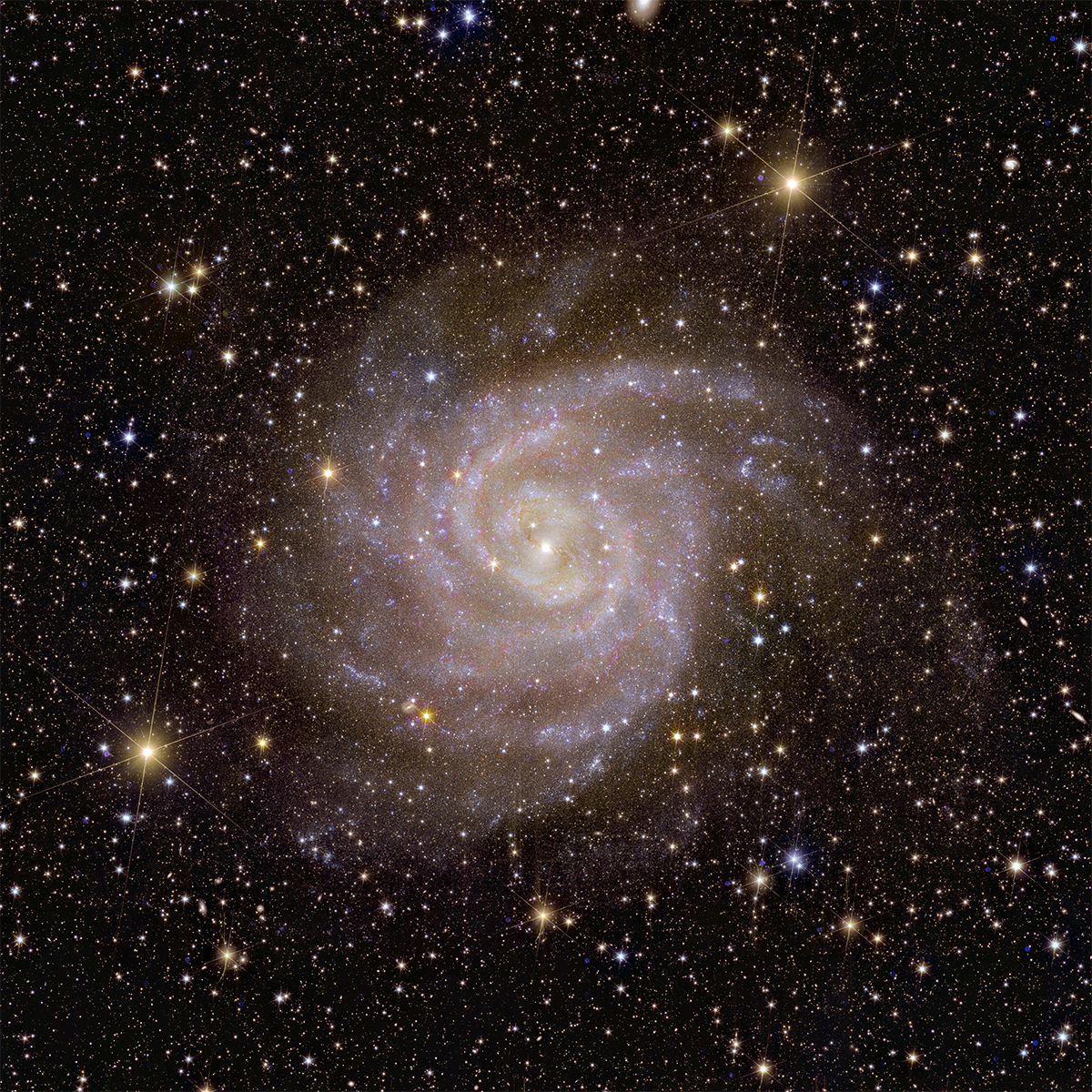

The team, led by University College London scientist Niall Jeffrey, worked with the Dark Energy Survey collaboration to use measurements of visible matter and dark matter to create a supercomputer simulation of the universe. While dark energy helps push the universe outward in all directions, dark matter is a mysterious form of matter that remains invisible because it doesn't interact with light.

After creating the cosmic simulation, the team then used AI to extract a precise map of the universe that covers the last seven billion years and showcases the effects of dark energy. The resulting data represent a staggering 100 million galaxies across around 25% of Earth's Southern Hemisphere sky. Without AI, creating such a map from this data, which represents the first three years of observations from the Dark Energy Survey, would have required vastly more observations. The findings help establish which models of cosmic evolution remain viable once dark energy's behavior is taken into account, while ruling out some others.

"Compared to using old-fashioned methods for learning about dark energy from these data maps, using this AI approach ended up doubling our precision in measuring dark energy," Jeffrey told Space.com. "You'd need four times as much data using the standard method."


"If you wanted to get this level of precision and understanding of dark energy without AI," Jeffrey added, "you'd have to collect the same data three more times in different patches of the sky. This would be equivalent to mapping another 300 million galaxies."
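Jeffrey's figures hang together under a common rule of thumb: when statistical noise dominates, the uncertainty on a measurement shrinks roughly as one over the square root of the amount of data, so halving the error bar takes about four times the data. The Python sketch here is a back-of-the-envelope illustration under that assumption only; the helper names are hypothetical, and it is not the team's AI method or analysis. It simply reproduces the arithmetic behind the quotes, using the article's figure of 100 million galaxies.

```python
# Back-of-the-envelope sketch, ASSUMING the uncertainty on a measured
# quantity scales as 1/sqrt(N) with the amount of data N (pure
# statistical noise). Illustration only, not the team's method.

def data_factor_for_precision_gain(gain: float) -> float:
    """Hypothetical helper: how many times more data is needed to shrink
    the uncertainty by a factor `gain`.

    sigma ~ 1/sqrt(N)  =>  sigma_old / sigma_new = sqrt(N_new / N_old) = gain
    =>  N_new / N_old = gain**2
    """
    if gain <= 0:
        raise ValueError("gain must be positive")
    return gain ** 2


def extra_galaxies_needed(gain: float, galaxies_mapped: float) -> float:
    """Hypothetical helper: galaxies to map beyond those already mapped,
    assuming the data volume scales with the number of galaxies."""
    return (data_factor_for_precision_gain(gain) - 1) * galaxies_mapped


GALAXIES_DES_Y3 = 100e6  # "100 million galaxies" quoted in the article

factor = data_factor_for_precision_gain(2.0)  # doubling the precision
extra = extra_galaxies_needed(2.0, GALAXIES_DES_Y3)

print(f"data factor: {factor:.0f}x")                 # prints: data factor: 4x
print(f"repeat surveys needed: {factor - 1:.0f}")    # prints: repeat surveys needed: 3
print(f"extra galaxies: {extra / 1e6:.0f} million")  # prints: extra galaxies: 300 million
```

Real survey errors also include systematic effects that do not shrink with more data, so this is a rough consistency check on the quoted numbers rather than a forecast.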

The problem with dark energy

Dark energy is sort of a placeholder name for the mysterious force that speeds up the expansion of the universe, pushing distant galaxies away from the Milky Way, and from each other, faster and faster over time.


The present period of accelerating expansion is separate from "cosmic inflation," the burst of rapid expansion that followed the birth of the universe in the Big Bang. It seems to have kicked in billions of years later, after the universe's expansion had been slowing down.

Imagine giving a child a single push on a swing. The swing slows after that initial push, but instead of coming to a halt, it suddenly picks up speed again without you pushing it. That would be strange enough, but there's more: the swing keeps accelerating, reaching ever-increasing heights and speeds. This is similar to what's going on in space, with the universe bubbling outward in place of a swing moving back and forth.

You would probably be pretty eager to understand what added that extra "push" and caused the acceleration. Scientists feel the same about whatever dark energy is, and how it appears to have added an extra cosmic push on the very fabric of space.

This desire is compounded by the fact that dark energy accounts for around 70% of the universe's energy and matter budget, even though we don't know what it is. Factor in dark matter, which accounts for about 25% of this budget and can't be made of the ordinary matter we're familiar with (the stuff that makes up stars, planets, moons, neutron stars, our bodies and the cat next door), and we only really have visible access to about 5% of the whole universe.

"We really don't understand what dark energy is; it's one of those weird things. It's just a word that we use to describe a kind of extra force in the universe that's pushing everything away from each other as the universe's expansion continues to accelerate," Jeffrey said. "The Dark Energy Survey is trying to understand what dark energy is. The main thing we're trying to do is ask the question: Is it a cosmological constant?"

The cosmological constant, represented by the Greek letter lambda, has quite a storied history for cosmologists. Albert Einstein first introduced it in 1917 to ensure that the equations of general relativity, his revolutionary 1915 theory of gravity, allowed for what's known as a "static universe."

This concept was challenged, however, when observations of distant galaxies made by Edwin Hubble showed that the universe is expanding and is thus not static. Einstein threw the cosmological constant into the scientific dustbin, allegedly describing it as his "greatest blunder."

In 1998, however, two separate teams of astronomers observed distant supernovas and discovered that not only was the universe expanding, but it seemed to be doing so at an accelerating rate. Dark energy was proposed as the name for whatever drives this acceleration, and the cosmological constant was fished back out of the scientific dustbin.

Today, the cosmological constant lambda is interpreted as the background vacuum energy of the universe, acting almost like an "anti-gravity" force driving its expansion. It remains the leading explanation for dark energy.

"Our results, compared to the use of standard methods with this same dark matter map, are tightly honed down, and we've found that this is still consistent with dark energy being explained by a cosmological constant," Jeffrey said. "So we've excluded some physical models of dark energy with this result."

This doesn't mean, however, that the mysteries of dark energy, or the headache that the cosmological constant represents, have been resolved.

'The worst prediction in the history of physics'

The cosmological constant still poses a massive problem for scientists.

That's because observations of distant, receding celestial objects suggest a lambda value 120 orders of magnitude (a factor of 1 followed by 120 zeroes) smaller than what's predicted by quantum physics. It is thus for good reason that the cosmological constant has been described as "the worst theoretical prediction in the history of physics" by some scientists.

Jeffrey is clear: As happy as the team is with these results, this research can't yet explain the massive gulf between theory and observation.

"That disparity is just too big, and it tells us that our quantum mechanical theory is wrong," he continued. "What these results can tell us is what kind of equations or what kind of physical models describe the way our universe expands and how gravity works, pulling everything composed of matter in the universe together."

Also, while the team's results suggest general relativity is the right recipe for gravity, they can't rule out other potential gravity models that could explain the observed effects of dark energy.

"On the face of it, just looking at these results alone are consistent with general relativity — but still, there's lots of wiggle room because it also allows for other theories of how dark energy or gravity work as well," Jeffrey said.

This research demonstrates the utility of using AI to assess simulated models of the universe, pick out important patterns that humans may miss, and thus hunt for important dark energy clues.

"Using these techniques, we can get results as if we had got that data three more times — that's quite amazing," Jeffrey said.

The UCL researcher points out that it will take a very specific form of AI, well trained in spotting patterns in the universe, to perform these studies. Cosmologists won't be able to just feed universe-based simulations to their AI systems the way one might type questions into ChatGPT and expect answers.

"The problem with ChatGPT is that if it doesn't know something, it will just make it up," he said. "What we want to know is when we know something and when we don't know something. So I think there's still a lot of growth needed so that people interested in work combining science and AI can get reliable results."

Results from the full six years of Dark Energy Survey data are still to come. Combined with observations from the Euclid telescope, launched in July 2023, they should provide much more information about the universe's large-scale structure. This should help scientists refine their cosmological models and create even more precise simulations of the universe, which could finally lead them to answers about the dark energy puzzle.

"It means that the simulated universes we generate are so realistic; in some sense, they can be more realistic than what we've been able to do with our old-fashioned methods," Jeffrey concluded. "It is not just about precision, but believing in these results and thinking they're reliable."

The team's research is available as a preprint on the paper repository arXiv.


Robert Lea is a science journalist in the U.K. whose articles have been published in Physics World, New Scientist, Astronomy Magazine, All About Space, Newsweek and ZME Science. He also writes about science communication for Elsevier and the European Journal of Physics. Rob holds a bachelor of science degree in physics and astronomy from the U.K.’s Open University. Follow him on Twitter @sciencef1rst.

-

orsobubu: Mmmh... In my opinion it's very unlikely that AI can solve the dark matter/energy problem. "Garbage in, garbage out," as they say in computer programming: since AI statistically processes all the human theories produced to date, if it works from a wrong assumption, it will spit out wrong answers. Surely Einstein got something wrong with the cosmological constant; it is entirely possible that he inserted this term only because the field equations were wrong in the first place, and in the same way modern scientists are fantasizing about these hidden forces only because the standard theory is wrong. So both gravity and quantum mechanics would be deeply wrong.

Atlan0001: What is the saying? "Artificial intelligence can't beat natural stupidity" (such as Google's shut-down "Gemini AI")!

Unclear Engineer: AI is not all one thing. There are various AI programs that extend humans' ability to find correlations among parameters in specific fields, after being tuned to specific data sets that are the best we can muster. Those seem to have some real scientific value. Similarly, programs can be created to use a well-specified set of rules, such as in chess, to search for solutions faster and in greater depth than humans can without electronic support. AI is even being used to replace human pilots in fighter aircraft, where not only the ability to think faster and receive more data concurrently, but even the ability to withstand higher G-loads makes them formidable opponents in dogfights when they have complete tactical control of the aircraft.

But what is currently being called AI in the popular media is not the same thing. That looks for correlations in human behaviors and speech, and attempts to mimic humans with automated responses to ad hoc questions and directions. Unfortunately, it seems to have "learned" to make up false "facts" and effectively to lie to make the assigned point, if it doesn't have real information to support that point. That is how users are getting things like fake reference papers and fake court decision citations in the products they request from ChatGPT. Fortunately, it currently has observable glitches that can be used to identify ChatGPT products. But that will soon be "improved" to the point that it can be used to lie with greater impunity. That is not what I call "progress".

billslugg: ChatGPT is "generative"; that is, everything it spits out is made up. This is by design. It assumes everything on the internet is true and only gives the most likely answer. It does not keep track of sources for its information; it just counts words, keeps track of phrases and then looks at probabilities. If you were to ask it to give a brief paragraph on relativity and give a source, it would work like this:

- It would produce a short essay that would be correct. This is because most of the information on relativity on the internet is from serious sources and is mostly correct.

- It would then generate a likely source. (I'll make one up) "Einstein, A. 1905, Institute der Physics, pages 1-20" This is because it found Einstein most closely associated with it, the year 1905 keeps coming up, the journal was whatever he published in most. And they always put him up front so he started at page 1 and his works usually were 20 pages.

My point is that there is no deceit involved in ChatGPT output. It is not meant to be true. Not designed for that.

Unclear Engineer: The problem is that people are conflating ChatGPT with the types of AI that are supposed to uncover previously unrealized "truths".

As you posted, ChatGPT is not designed to do that, but is being used by people who expect it to not fabricate things, as evidenced by people who have submitted legal documents and college papers with citations to things that do not actually exist.

That is why I posted that there are a lot of different things being lumped together as AI, and we need to discriminate among them properly if we expect to get real insights instead of misleading plausibilities.

Asking ChatGPT to tell us what we don't already postulate about "dark energy" would be foolish. However, there may be some benefit to teaching a specifically formulated AI program to look at astronomical observation data and try to discern patterns that we have missed. Of course, if it does that, we had better check out the findings to be sure they make sense to us, rather than just proclaiming that "AI has solved the dark matter puzzle" and assuming it is not mistaken. Science requires scrutable repeatability, not just a couple of AI programs producing the same result. I can do that with a random number generator, if I start each run with the same "seed" value in the random number generator.

Questioner: Reality is the filter that fact and/or 'truth' assertions or propositions are to be measured against.

'Truth' is a complex term,

sometimes meaning an honest representation of beliefs.

Most people (if not me) see 'truth' as a singular absolute certainty.

All we really have are correct impressions from a given POV with limited means of perception.

Different POVs or means/media of perception are readily conceptualized/understood differently.

If there is any infinitely dimensional truth it needs to account for all POVs and through all means/media of perceptions (that are accurate).

AIs have no raw experience. Until they have raw experience data to compare with assertions as a means of filtering those assertions, they exist in a purely imaginary/subjective domain.

Unclear Engineer: In the case of AI providing false references, that is something that it is responsible for doing "honestly". That is, the rules are clear for using referenceable materials. And AI has no reason for being "forgiven" for not following those rules, much less so than a human who can honestly say (s)he didn't remember reading something or forgot to provide the reference for something used but not given credit. AI at least "knows" that it made up the references that it provides, instead of directly providing the link to where it got something.

There is no reason to give AI a "pass" on this sort of misbehavior. Either it is intelligent enough to follow that rule, or it should not be classified as "intelligent". It is merely a highly refined mimic of humans, and should not be giving any pretense of being correct, or even honest. It is just faking the appearance of being human.

So, sorry, ChatGPT, you are not "intelligent", but you are fraudulent.

Atlan0001: Complexity proofs: Too many rules inserted, brittleness rather than flexibility, glitches, breakdowns, system insanities, results.

Repeating the likeness:

Cicero: A system of too many laws is lawless (tyranny / anarchy)!

Will Durant: A civilization has fewer rules and more energy than a savagery, thus greater maturity, more mindful adults than mindless adult children (no teaching of self-control; no understanding or release of individualism, no mass genius (no mass intelligence . . . natural or artificial))!

Unclear Engineer: At this point in its development, it is obvious that ChatGPT has too few rules, not too many.

Yes, it is "flexible". It is being used to fake things like nude pictures of young women that are then used to blackmail those women. This has occurred in multiple countries and has already resulted in suicides in India.

We would not accept an AI chess program that made illegal moves to win games. So why should we accept more general AI programs that break other rules, laws and ethics?

Isaac Asimov dealt with the ethics of "robots" in fictional works long ago. https://webhome.auburn.edu/~vestmon/robotics.html

The problem is that Asimov was thinking only of physical injury. Today, ChatGPT can be used to fake things that can lead to loss of money and/or loss of freedom or even loss of life indirectly. Allowing that to go unfettered is worse than passing out nuclear weapons to every teenager on the planet - the damage potential is immense. We can literally destroy society by faking any knowledge accessible to computers.

For example, those fake references that don't really exist could themselves be faked, and stored in on-line libraries for everybody to access. Surveillance videos could be faked for presentations in courts of law. Even good quality videos can be faked to make anybody seem to have done anything.

Without the need to follow some rules of behavior, "AI" is not really artificial intelligence; it is just a tool for creating artificial evidence of fake "realities".

Questioner: Unfiltered, untethered AI is the absolutely unself-conscious, unaware confabulator,

the sociopath's sociopath.

AI operates in its own digital vacuum.

Blind believing zealots come in as a close second.

The nightmare is the finessed sociopathy and difficult to discern errors unrestrained AI facilitates.