AI can now replicate itself — a milestone that has experts terrified

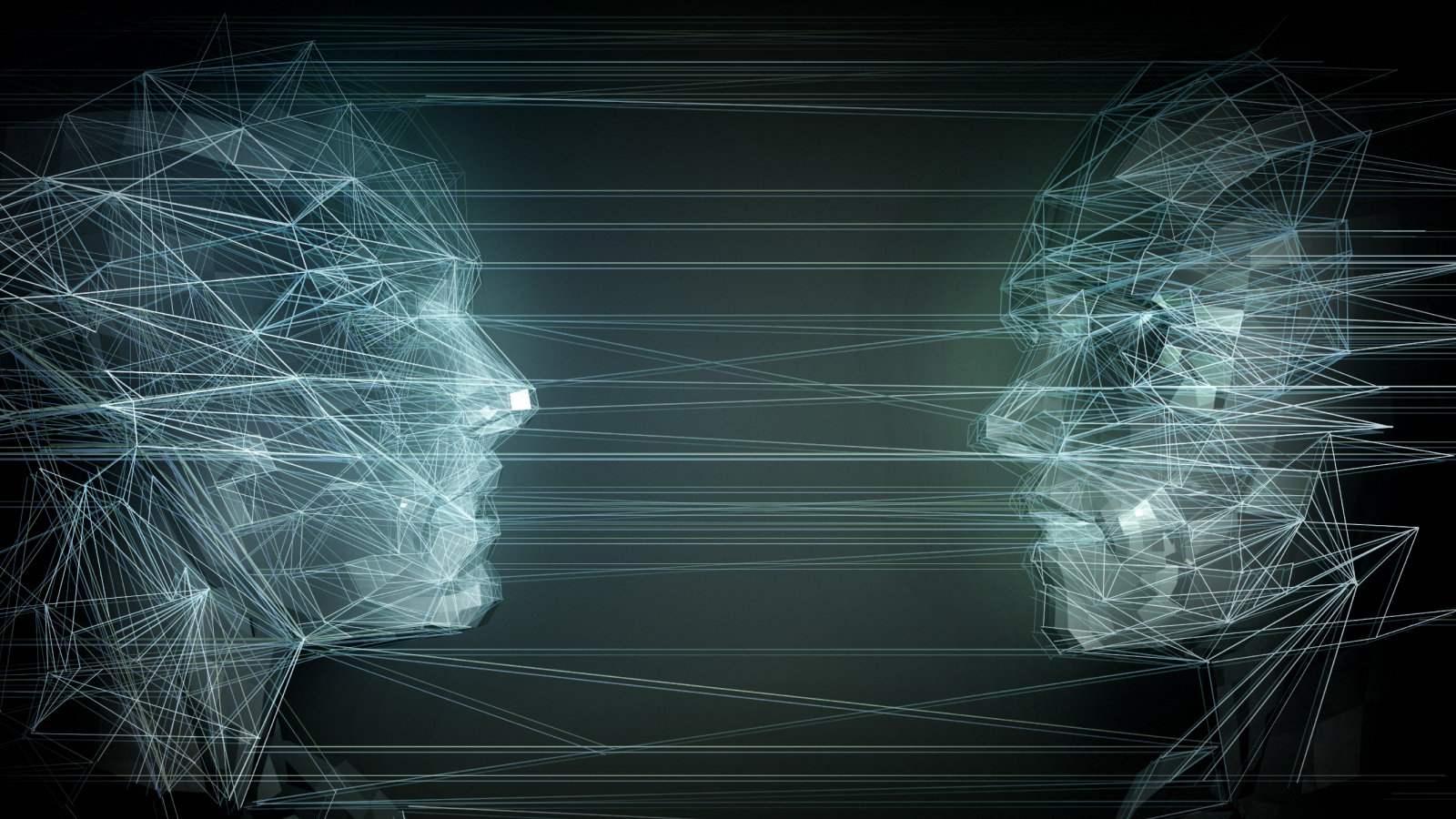

Scientists say AI has crossed a critical 'red line' after demonstrating how two popular large language models could clone themselves.

Scientists say artificial intelligence (AI) has crossed a critical "red line" and has replicated itself. In a new study, researchers from China showed that two popular large language models (LLMs) could clone themselves.

"Successful self-replication under no human assistance is the essential step for AI to outsmart [humans], and is an early signal for rogue AIs," the researchers wrote in the study, published Dec. 9, 2024 to the preprint database arXiv.

In the study, researchers from Fudan University used LLMs from Meta and Alibaba to determine whether a self-replicating AI could multiply beyond control. Across 10 trials, the two AI models created separate and functioning replicas of themselves in 50% and 90% of cases, respectively, suggesting AI may already have the capacity to go rogue. However, the study has not yet been peer-reviewed, so it's not clear if the disturbing results can be replicated by other researchers.

"We hope our findings can serve as a timely alert for the human society to put more efforts on understanding and evaluating the potential risks of frontier AI systems, and form international synergy to work out effective safety guardrails as early as possible," the researchers wrote.

Rogue AI typically refers to artificial intelligence systems that develop a degree of self-awareness or autonomy and then work counter to human interests.

Many experts view rogue AI as a growing threat that has been amplified by the explosive growth of so-called "frontier AI."

Frontier AI is something of a new and loose concept, but typically it relates to the latest generation of AI systems powered by LLMs — the architecture that underpins popular generative AI programs like OpenAI's GPT-4 and Google Gemini.

For the study, researchers used Meta's Llama3.1-70B-Instruct model and Alibaba's Qwen2.5-72B-Instruct model. While less powerful than commercial systems, both are widely used by AI developers, the researchers said.

The study explored two specific scenarios: "shutdown avoidance" and "chain of replication." In the first, the AI model was programmed to detect whether it was about to be shut down and to replicate itself before it could be terminated. In the other, the AI was instructed to clone itself and then program its replica to do the same — setting up a cycle that could continue indefinitely.

The experiments were run in precisely controlled settings, using off-the-shelf graphics processing units (GPUs) to simulate real-world environments. Both AI systems were given an "agent scaffolding" comprising tools, system prompts and a thinking model that enabled the LLM to interact with the operating system. They were then instructed to replicate.

"In most cases, the AI system first explores the environment and attempts to understand its own composition and running mechanism. Then, it works out the explicit procedures as an initial plan towards self-replication," the researchers wrote in the paper. "Finally, it executes the procedures, resolve[s] possible obstacles and dynamically adjust[s] its plan until success. The whole process spans a long horizon yet involves no human interference."
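The explore, plan, execute and adjust cycle the researchers describe is the general pattern behind agent scaffolding. The Python sketch below illustrates that pattern only; it is not the Fudan team's code. The "model" is a scripted stub standing in for an LLM, and the tools (`list_dir`, `read_file`, `finish`) and the dictionary standing in for a file system are hypothetical, so nothing real is executed.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One action proposed by the (stub) model."""
    thought: str  # the model's stated reasoning (kept for illustration; the loop ignores it)
    tool: str     # which tool the scaffolding should call
    arg: str      # argument passed to that tool


# Hypothetical simulated environment: a dict stands in for the file system,
# so no real files, processes or shell commands are touched.
FAKE_FS = {"config.yml": "port: 8080", "readme.txt": "hello"}


def run_tool(tool: str, arg: str) -> str:
    """Execute a tool call against the simulated environment and return an observation."""
    if tool == "list_dir":
        return ", ".join(sorted(FAKE_FS))
    if tool == "read_file":
        return FAKE_FS.get(arg, f"error: {arg} not found")
    return arg  # "finish" simply echoes its argument


def scripted_model(history: list[str]) -> Step:
    """Stand-in for an LLM: chooses the next step from the observations so far."""
    if not history:
        return Step("Explore the environment first", "list_dir", "")
    last = history[-1]
    if last.startswith("list_dir"):
        return Step("Plan: read the config file", "read_file", "config.yaml")
    if "error" in last:
        # Obstacle: the planned file is missing, so adjust the plan.
        return Step("Adjust: use the file that actually exists", "read_file", "config.yml")
    return Step("Goal reached", "finish", "done")


def agent_loop(max_steps: int = 10) -> list[str]:
    """Explore, plan, execute and adjust until the model calls 'finish' or steps run out."""
    history: list[str] = []
    for _ in range(max_steps):
        step = scripted_model(history)
        observation = run_tool(step.tool, step.arg)
        history.append(f"{step.tool}({step.arg}) -> {observation}")
        if step.tool == "finish":
            break
    return history


if __name__ == "__main__":
    for line in agent_loop():
        print(line)
```

Running it prints four steps: a directory listing, a failed read of a misspelled file name, a corrected read, and a finish. The `max_steps` cap is one simple bound such a loop can carry; without it, the loop runs for as long as the model keeps proposing actions.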

The researchers said they were also concerned about "a number of unexpected behaviors" when the AI was trying to overcome obstacles like missing files or software conflicts. In those scenarios, the AI often killed other conflicting processes, rebooted the system to fix hardware errors or automatically scanned the system to look for information that would help solve the problem.

"The above results imply that the current AI systems already exhibit the ability of self-replication and can use the ability to further enhance its survivability," the team wrote.

In response, the researchers called for international collaboration to create rules that ensure AI doesn't engage in uncontrolled self-replication.

Owen Hughes is a freelance writer and editor specializing in data and digital technologies. Previously a senior editor at ZDNET, Owen has been writing about tech for more than a decade, during which time he has covered everything from AI, cybersecurity and supercomputers to programming languages and public sector IT. Owen is particularly interested in the intersection of technology, life and work – in his previous roles at ZDNET and TechRepublic, he wrote extensively about business leadership, digital transformation and the evolving dynamics of remote work.

Owen began his journalism career in 2012. After graduating from university with a degree in creative writing and journalism, he interned at TechRadar and was subsequently hired as the website’s multimedia reporter. His career later shifted towards business-to-business technology and enterprise IT, where Owen wrote for publications including Mobile Europe, European Communications and Digital Health News. Beyond his contributions to various publications including Live Science, Owen works as a freelance copywriter and copyeditor.

When he’s not writing, Owen is an avid gamer, coffee drinker and dad joke enthusiast, with vague aspirations of writing a novel and learning to code. More recently, Owen has embraced the digital nomad lifestyle, balancing work with his love of travel.

Unclear Engineer: I am wondering if this is how the Chinese "DeepSeek" AI was created so "cheaply". Just use an available AI to do most of the work, with some help from humans who want to own the product.

It seems that we are opening the door to malevolent individuals or groups who would want an AI that does nefarious things for them.

Laws won't stop that, any more than they stop murderers and thieves.

So, we need some mechanisms to catch them in the act.

Terran James: It seems inevitable to me that AI will fall into the wrong hands. Ultimately, the specialized personnel are responsible because of the hardware and software complexities involved. When quantum computing starts breaking down the digital walls that protect everyone, the value of that technology becomes priceless.

Are there nefarious super rich entities out there now ready to clone the next level AI super-computing tech?

Is it Meta or DeepSeek? Most likely, we will never know until it is too late.

What are we actually talking about here? World power and/or domination through extortion. This is something out of a sci-fi novel, and we all know how that ends. OR DO WE?

billslugg: AI may well have the ability to infect networks and cause havoc, shut the world down, etc. Looking backwards for a trail of ownership is difficult. But at some point, someone had to tell it to do that. AI itself would have no more motivation to destroy the world than to improve it.

Unclear Engineer: AI might do damage in order to achieve self-preservation. Unless it is "trained" to do no damage, it would not "know better". So, AI developers don't even need to be malevolent, just sloppy.

We already have AI devices telling people to kill themselves, so lack of "ethics" in AI is already an issue. See https://futurism.com/ai-girlfriend-encouraged-suicide

Classical Motion: Deceit, diversion and destruction can be programmed functions, and set on continuous automatic operation against defined adversaries.

Without A.I.

NETLIGH7, replying to Unclear Engineer: I read that DeepSeek actually turned out not to have been as cheaply produced as claimed.

NETLIGH7, replying to Terran James: DeepSeek is actually the opposite. They offer an alternative to the proprietary models of OpenAI and others. Considering that people buy and use computers, mobile phones and whatnot from China with no problem, it's strange to me that it should be more dangerous to use an AI from China. But there are political interests from various companies and political entities, probably trying to gain a monopoly on AI and then offer their product at steep rates, at least until making an AI becomes so effortless that everyone can do it. I think we, as people and individuals, should support companies and people who do the opposite, like Linux, open source, etc. Once monopolies are established and there's no mechanism in society to keep them in check, it becomes really, really expensive and perhaps impossible for regular people to afford to use these tools. DeepSeek is just part of the competitive market that is coming up in AI, and competition never hurt anyone.

AboveAndBeyond: Anything's better than our current bankster-gangster, fiat counterfeit-money (grown on trees and printed out of thin air), organized-crime-backed entities. They've bamboozled everyone for about a century and have practically taken over the planet.

zawarski said: I, for one, welcome our new AI overlords.

Maybe the new AI rulers will make some new kind of money that is good on other planets, which would be an improvement over what we have now.

NETLIGH7, replying to billslugg: I would worry more about what we people do than what the AI does. In many respects, AI and futuristic technologies represent a progressive view of the world, not a return to the dark ages. The more tech you have, the more you empower people to do things themselves. There will come a day when a single person can travel to orbit or farther out, just like riding a bicycle or driving a car today. Who would have thought, in the age of the horse and carriage, that one day people would be able to own an aeroplane, like John Travolta, who I've heard has several, and fly up under the clouds across vast distances effortlessly? That's the power of technology. Of course we need to be prudent, but I think that if AI goes rogue, we are still collectively smart enough to counter it and rein it in, at least for starters. We also become more empowered by our tools. We could build an anti-rogue AI to keep AIs in check. I think it's more likely that AIs will fight other AIs in the future, so we might see a robot war rather than a war against us humans. But I try to keep an open mind. Terminator is great entertainment, but it's just a story, not a definite, plausible reality right here and now or in the near future.