How AI is helping scientists unlock some of the sun's deepest secrets

"Our work goes beyond enhancing old images — it's about creating a universal language to study the sun's evolution across time."

Our sun cycles through its pattern of energetic activity about every 11 years, but the technology scientists use to observe it is advancing at a faster pace.

That's one of the take-home messages of a new study, which argues that artificial intelligence (AI) can bridge the growing gap between newer and older solar data and help scientists uncover overlooked aspects of our star's long-term evolution.

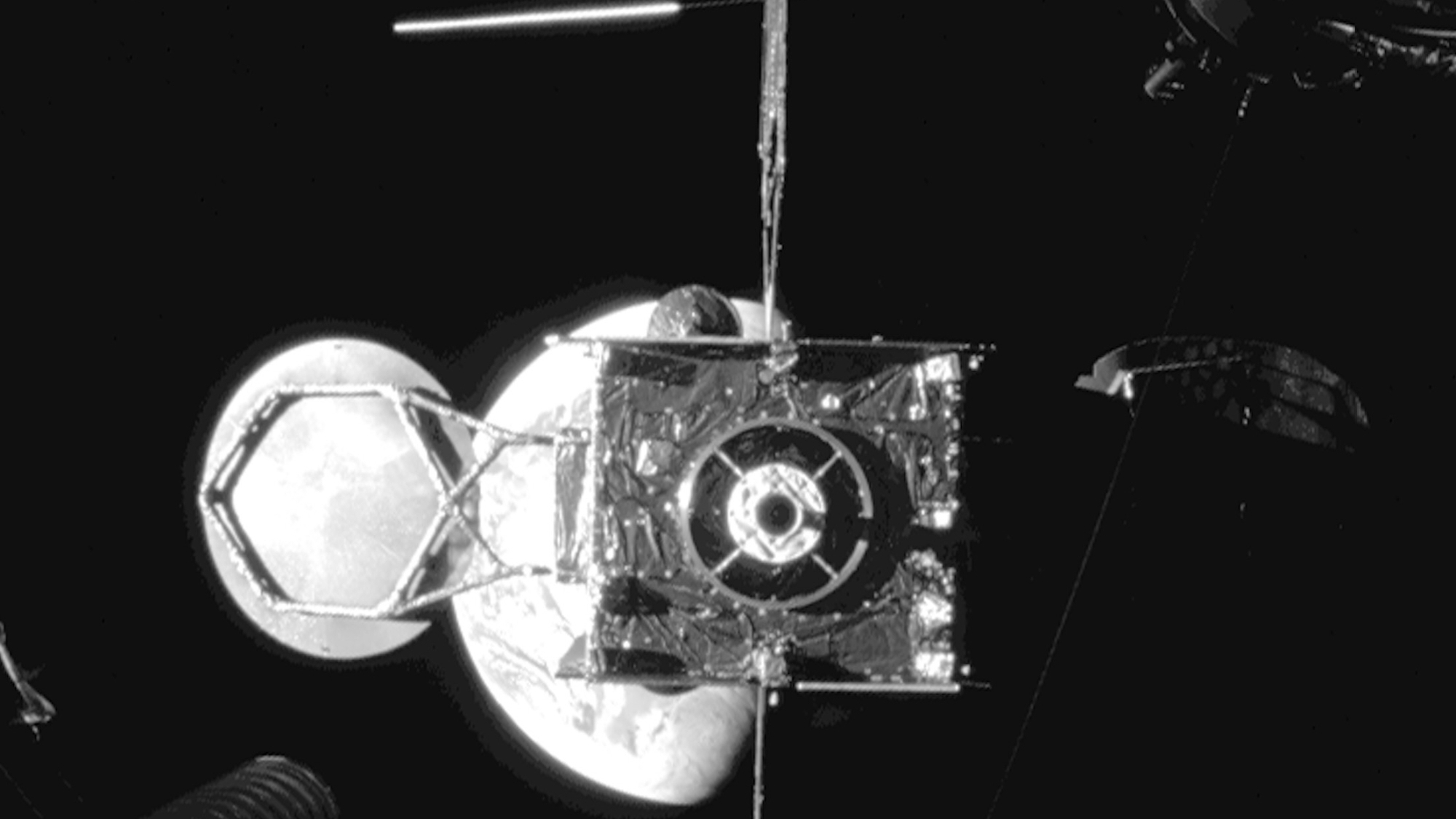

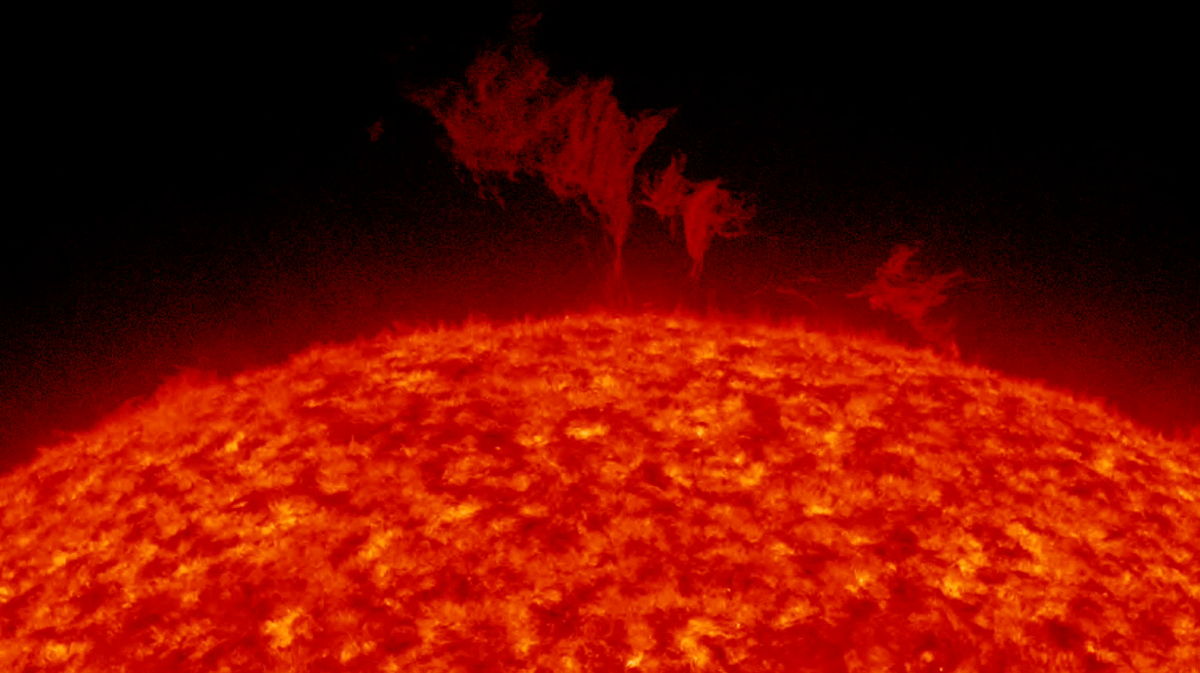

New generations of solar telescopes and instruments keep delivering unprecedented views of the sun. These advances, which let scientists capture intricate details of solar flares and map the sun's magnetic fields with increasing precision, are crucial for understanding its complex processes and driving new discoveries. Yet data from each new instrument, though of higher quality, is often incompatible with data from older ones because of differences in resolution, calibration and image quality. That makes it tricky to study how the sun evolves over decades, the new study argues.

The new AI-based approach overcomes these limitations by identifying patterns and relationships within datasets from different solar instruments and data types, translating them into a common, standardized format. This provides scientists with a richer and more consistent archive of solar observations for their research, particularly for long-term analyses of historic sunspots, rare events and studies that require combining data from multiple instruments, the study authors say.


"AI can't replace observations, but it can help us get the most out of the data that we've already collected," Robert Jarolim, who develops advanced algorithms to process solar images at the University of Graz in Austria and led the new study, said in a statement. "That's the real power of this approach."

The AI method developed by Jarolim and his team can essentially translate observations from one instrument to another, even if those instruments never operated at the same time. That makes their data-driven approach applicable to many astrophysical imaging datasets, the new study suggests.


The team achieved this through a two-step process involving neural networks, a type of machine learning algorithm loosely modeled on the human brain. First, one neural network takes high-quality images from one instrument and simulates degraded versions, as if they had been taken by a different, lower-quality instrument. This allows the AI to learn the "damage," or systematic differences, that an instrument introduces.
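To make the first step concrete, here is a minimal toy sketch in Python. It assumes a one-dimensional intensity profile in place of a full solar image, and it replaces the study's learned degradation network with a hand-written blur-plus-noise model. The names `box_blur` and `degrade`, the 3-sample blur and the noise level are illustrative assumptions, not the researchers' code.

```python
import random

def box_blur(signal, width=3):
    """Average each sample with its neighbours; edge samples use only the neighbours that exist."""
    half = width // 2
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out

def degrade(signal, noise_sigma=0.05, seed=0):
    """Hypothetical stand-in for the learned degradation network:
    blur the high-quality profile, then add Gaussian noise (seeded for repeatability)."""
    rng = random.Random(seed)
    return [v + rng.gauss(0.0, noise_sigma) for v in box_blur(signal)]

# A sharp "high-quality" profile: a dark spot on a bright background.
high_quality = [1.0] * 8 + [0.2] * 4 + [1.0] * 8
low_quality = degrade(high_quality)  # what a blurrier, noisier instrument might record
```

In the approach the article describes, this degradation is learned by a neural network rather than written by hand, so it can in principle capture instrument-specific differences that a fixed blur cannot.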

A second neural network is then trained to take these artificially degraded images and "undo" the degradation, making them look like the original high-quality images again. In doing so, it learns how to correct for the differences between the two instruments, according to the new study.

Once the AI has learned how to "fix" the artificially degraded images, the second neural network can be applied to real, low-quality images collected by older instruments. It improves their resolution and reduces noise without distorting or removing the actual physical features of the sun present in the original data.
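The second step, learning to undo the degradation and then applying that correction to new low-quality data, can be illustrated with a toy Python sketch. It swaps the study's second neural network for a much simpler technique: a three-weight linear filter fitted by least squares on pairs of (artificially degraded, original) profiles. The synthetic profiles, the helper names (`fit_restorer`, `restore`, `solve3`) and the noise level are hypothetical choices for illustration only.

```python
import random

def box_blur(signal):
    """3-sample moving average; edge samples average only the neighbours that exist."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - 1): i + 2]
        out.append(sum(window) / len(window))
    return out

def degrade(signal, rng, noise_sigma=0.02):
    """Hypothetical stand-in for the step-one degradation: blur, then add Gaussian noise."""
    return [v + rng.gauss(0.0, noise_sigma) for v in box_blur(signal)]

def neighbourhood(signal, i):
    """Sample i and its two neighbours, repeating the edge sample at the borders."""
    n = len(signal)
    return [signal[max(0, i - 1)], signal[i], signal[min(n - 1, i + 1)]]

def solve3(a, b):
    """Solve the 3x3 linear system a x = b by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0] * 3
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x

def fit_restorer(pairs):
    """Least-squares fit of 3 filter weights mapping degraded neighbourhoods to clean samples."""
    ata = [[0.0] * 3 for _ in range(3)]  # accumulates X^T X
    atb = [0.0] * 3                      # accumulates X^T y
    for degraded, clean in pairs:
        for i, target in enumerate(clean):
            x = neighbourhood(degraded, i)
            for r in range(3):
                atb[r] += x[r] * target
                for c in range(3):
                    ata[r][c] += x[r] * x[c]
    return solve3(ata, atb)  # normal equations: (X^T X) w = X^T y

def restore(signal, weights):
    """Apply the learned 3-tap filter to every sample of a profile."""
    return [sum(w * v for w, v in zip(weights, neighbourhood(signal, i)))
            for i in range(len(signal))]

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

rng = random.Random(42)

# Train: pair synthetic "high-quality" profiles (a dark spot of random
# position and width on a bright background) with degraded copies.
pairs = []
for _ in range(200):
    start, width = rng.randrange(2, 14), rng.randrange(1, 5)
    clean = [0.2 if start <= i < start + width else 1.0 for i in range(20)]
    pairs.append((degrade(clean, rng), clean))
weights = fit_restorer(pairs)

# Apply: correct a new low-quality profile the filter has not seen.
truth = [1.0] * 9 + [0.2] * 2 + [1.0] * 9
observed = degrade(truth, rng)
restored = restore(observed, weights)
print(f"MSE before: {mse(observed, truth):.4f}, after: {mse(restored, truth):.4f}")
```

Because the identity filter (0, 1, 0) is among the filters the least-squares fit can choose, the fitted weights can never do worse than leaving the degraded training profiles untouched. The neural network in the study can learn far more complex, nonlinear corrections than this linear filter.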

This AI framework allows older data to effectively benefit from the capabilities of newer instruments, enabling scientists to bring less detailed historical observations up to the quality of modern data, according to the statement.

"This project demonstrates how modern computing can breathe new life into historical data," study co-author Tatiana Podladchikova, of the Skolkovo Institute of Science and Technology in Russia, said in the same statement. "Our work goes beyond enhancing old images — it's about creating a universal language to study the sun's evolution across time."

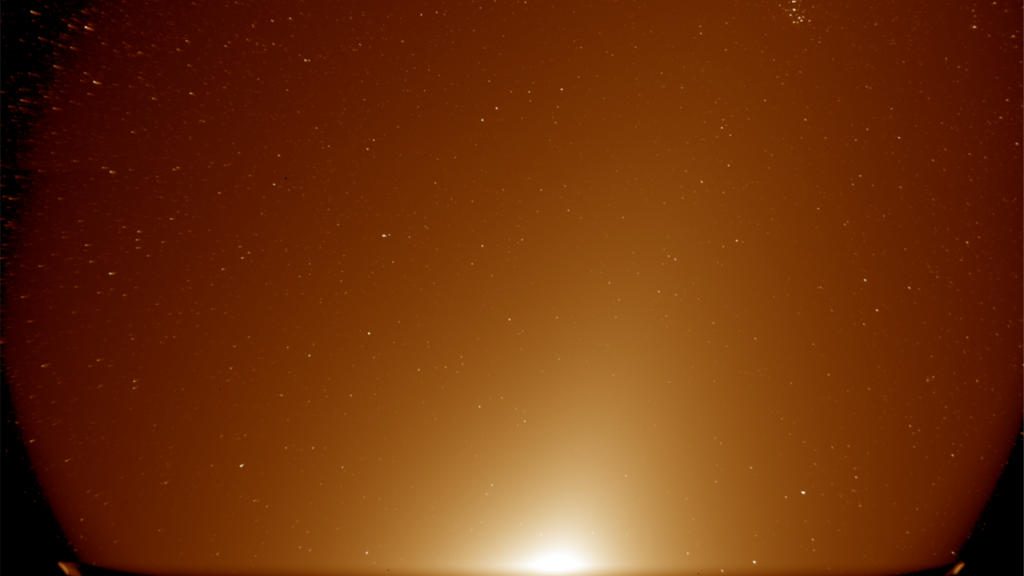

The researchers applied this technique to data collected by different space telescopes over two solar cycles, spanning a little over two decades. According to the statement, the approach improved the detail in full-disk solar images, reduced the blurring and distortion that atmospheric noise causes in ground-based observations, and even estimated magnetic fields on the far side of the sun.

The team also applied the method to a sunspot (NOAA 11106) that was tracked for about a week in September 2010. According to the findings, the AI produced sharper and more detailed "magnetic pictures" of the sunspot that allowed scientists to see its magnetic structure more effectively than with the original data collected by the Solar and Heliospheric Observatory, a joint effort of NASA and the European Space Agency.

"Ultimately, we're building a future where every observation, past or future, can speak the same scientific language," Podladchikova said in the statement.

This research is described in a paper published April 2 in the journal Nature Communications.


Sharmila Kuthunur is a Seattle-based science journalist focusing on astronomy and space exploration. Her work has also appeared in Scientific American, Astronomy and Live Science, among other publications. She has earned a master's degree in journalism from Northeastern University in Boston. Follow her on BlueSky @skuthunur.bsky.social
