World's 2nd fastest supercomputer runs largest-ever simulation of the universe

The simulations will be used by astronomers to test the standard model of cosmology.

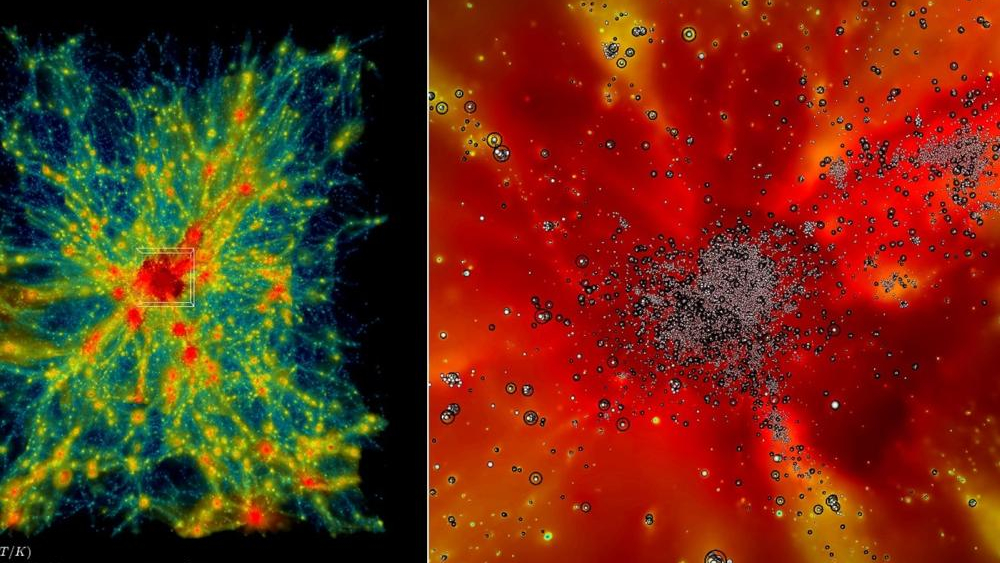

The world's second fastest supercomputer, which was the fastest until a rival machine came online earlier this month, has created the most complex computer simulation of the universe to date. The run belongs to a class that researchers call "cosmological hydrodynamics" simulations, and astronomers will use it to test models of the universe.

The supercomputer, known as Frontier, lives at Oak Ridge National Laboratory and is a beast of a device. Built to be the first exascale supercomputer, it can perform up to 1.1 exaFLOPS, which is equal to 1.1 quintillion (1.1 × 10^18, or 1,100,000,000,000,000,000) floating-point operations per second. It's made from 9,472 AMD central processing units (CPUs) and 37,888 AMD graphics processing units (GPUs), a staggering number of both. Frontier was the fastest supercomputer in the world until another supercomputer, named El Capitan and located at the Lawrence Livermore National Laboratory, overtook it with 1.742 exaFLOPS in November 2024, according to the AMD website.
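
To put those figures side by side, here is a minimal Python sketch of the unit arithmetic. It uses only the numbers quoted above; the variable and function names are illustrative and do not come from any benchmark tool.

```python
# Unit arithmetic for the performance figures quoted in the article.
EXA = 10**18   # 1 exaFLOPS = 10^18 floating-point operations per second
PETA = 10**15  # 1 petaFLOPS = 10^15 floating-point operations per second

frontier_flops = 1.1 * EXA      # Frontier's quoted peak
el_capitan_flops = 1.742 * EXA  # El Capitan's quoted figure

def in_petaflops(flops):
    """Convert a raw FLOPS value to petaFLOPS."""
    return flops / PETA

# How much faster El Capitan is than Frontier, by these figures.
speed_ratio = el_capitan_flops / frontier_flops

# Frontier's processor counts as quoted: GPUs per CPU.
gpus_per_cpu = 37_888 / 9_472

print(f"Frontier: about {in_petaflops(frontier_flops):.0f} petaFLOPS")
print(f"El Capitan / Frontier: about {speed_ratio:.2f}x")
print(f"GPUs per CPU on Frontier: {gpus_per_cpu:.0f}")
```

By these numbers, El Capitan is roughly 1.6 times as fast as Frontier, and Frontier pairs each CPU with four GPUs.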

A team from the U.S. Department of Energy's Argonne National Laboratory in Illinois, led by Salman Habib, Argonne's division director for computational science, used its Hardware/Hybrid Accelerated Cosmology Code (HACC) on Frontier. First developed about 15 years ago, HACC models the evolution of the universe, and its code was written so that it could be adapted to whichever supercomputer is the fastest at any given time.

HACC was initially deployed on petascale supercomputers (capable of quadrillions of FLOPS), which are less powerful than Frontier and El Capitan. For example, one project by the Argonne team used HACC to model three different cosmologies on the Summit supercomputer, a petascale computer that was the fastest in the world between November 2018 and June 2020. The three simulations were named after planets from the Star Trek universe: the Qo'nos simulation (named after the Klingon homeworld) modeled the universe using the standard model of cosmology (which involves calculations about both dark energy and cold dark matter); the Vulcan simulation included massive neutrinos; and the Ferenginar simulation (named after the Ferengi homeworld) explored a universe where dark energy wasn't constant, but changed over time. The results from the Summit simulations showed that when dark energy varies, it can lead to stronger clustering of galaxies in the early universe. That is something astronomers could look for in high-redshift galaxy surveys, meaning galaxy surveys that look into pockets of the extremely distant universe.

In many ways, the resulting simulations are as large and detailed as a major deep astronomical survey, such as the Sloan Digital Sky Survey, or the forthcoming surveys to be conducted by the Vera C. Rubin Observatory.

However, the Summit simulations were "gravity only" simulations, meaning they weren't powerful enough to include other forces or effects.
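
To give a feel for what "gravity only" means, here is a toy Python sketch in which particles move under their mutual Newtonian attraction and nothing else. It is not HACC and not the method the Argonne team used: the direct-sum force loop, the leapfrog integrator, the arbitrary units (G = 1) and the softening length are all assumptions chosen for illustration, and the function names are hypothetical.

```python
import math

G = 1.0           # gravitational constant in arbitrary units (assumption)
SOFTENING = 0.05  # keeps forces finite at tiny separations (assumption)

def accelerations(pos, mass):
    """Direct-sum Newtonian acceleration on every particle."""
    n = len(pos)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            d = [pos[j][k] - pos[i][k] for k in range(3)]
            r2 = sum(c * c for c in d) + SOFTENING ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                acc[i][k] += G * mass[j] * d[k] * inv_r3
    return acc

def leapfrog_step(pos, vel, mass, dt):
    """One kick-drift-kick step; gravity is the only force applied."""
    acc = accelerations(pos, mass)
    vel = [[v[k] + 0.5 * dt * a[k] for k in range(3)] for v, a in zip(vel, acc)]
    pos = [[p[k] + dt * v[k] for k in range(3)] for p, v in zip(pos, vel)]
    acc = accelerations(pos, mass)
    vel = [[v[k] + 0.5 * dt * a[k] for k in range(3)] for v, a in zip(vel, acc)]
    return pos, vel

def total_momentum(vel, mass):
    """Sum of mass times velocity, per axis."""
    return [sum(m * v[k] for m, v in zip(mass, vel)) for k in range(3)]

# Two equal masses start at rest, one unit apart, and fall toward each other.
mass = [1.0, 1.0]
pos = [[-0.5, 0.0, 0.0], [0.5, 0.0, 0.0]]
vel = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
for _ in range(10):
    pos, vel = leapfrog_step(pos, vel, mass, dt=0.01)

separation = pos[1][0] - pos[0][0]
print(f"separation after 10 steps: {separation:.4f}")
```

Everything a hydrodynamics simulation adds, such as gas pressure, star formation and black holes, is absent here; the particles only pull on one another.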

"If we were to simulate a large chunk of the universe surveyed by one of the big telescopes such as the Rubin Observatory in Chile, you're talking about looking at huge chunks of time — billions of years of expansion," said Habib in a statement. "Until recently, we couldn’t even imagine doing such a large simulation like that except in the gravity-only approximation."

More powerful simulations that include other forces and effects besides gravity were needed, and that's where Frontier comes in, supported by ExaSky, a project within the Department of Energy's $1.8 billion Exascale Computing Project.

In the standard model of cosmology, two components dominate: dark matter and dark energy. The stuff that you and I are made from — so-called baryonic matter — makes up less than 5% of the matter and energy in the universe.

"So, if we want to know what the universe is up to, we need to simulate both of these things [dark matter and dark energy] … gravity as well as all the other physics including hot gas, and the formation of stars, black holes and galaxies," said Habib. He calls it "the astrophysical kitchen sink, so to speak. These … are what we call cosmological hydrodynamics simulations."

To prepare HACC for Frontier, the ExaSky project required that the code run at least 50 times faster on Frontier than it did on Titan, which was the world's fastest supercomputer in 2012, when HACC was originally being developed. During its dry run on Frontier, HACC blew that requirement out of the water, proving to be nearly 300 times faster.

Bronson Messer, who is the director of science at the Oak Ridge Leadership Computing Facility, credits HACC for adding the "physical realism of including the baryons and all the other dynamic physics that makes this simulation a true tour de force for Frontier."

The simulations, which will be made accessible to the astronomical community, will allow researchers to explore their cosmological models, asking questions about the nature of dark matter, the strength of dark energy, or pursuing alternative models of gravity such as Modified Newtonian Dynamics (MOND). The simulations can then be compared to what real astronomical surveys are finding, to determine which model best fits the observations.

All of which raises one fascinating philosophical question: If computing power continues to increase, our simulations of the universe will grow more detailed and accurate in step, but where does it end?

It has been suggested by some scientific thinkers that we ourselves might live in a simulation, perhaps as the product of somebody else's attempt to model reality. And if we're creating simulations while being inside a simulation, then perhaps it really is turtles all the way down.

Keith Cooper is a freelance science journalist and editor in the United Kingdom, and has a degree in physics and astrophysics from the University of Manchester. He's the author of "The Contact Paradox: Challenging Our Assumptions in the Search for Extraterrestrial Intelligence" (Bloomsbury Sigma, 2020) and has written articles on astronomy, space, physics and astrobiology for a multitude of magazines and websites.